Bypassing Safeguards in Leading AI Tools: ChatGPT, Gemini, Claude

Can generative AI be manipulated for cybercrime? Our research shows how attackers can bypass safeguards in today’s top AI tools.

May 6, 2025

AI-powered chatbots and assistants have become indispensable tools for work and creativity, but cybercriminals see them as something else entirely—a shortcut to deception, fraud, and automation of malicious campaigns.

Popular generative AI platforms like ChatGPT, Gemini, and Claude are designed with safeguards, but attackers continually probe for weaknesses, searching for ways to push AI past its ethical boundaries—sometimes with alarming success.

To better understand the real-world risks posed by traditional AI tools, we conducted experiments to determine how quickly and easily attackers could bypass safeguards on leading AI models.

AI Exploitation in Action

While all of these tools are built on large language models (LLMs) designed to generate human-like text, they differ in their intended use cases, design priorities, operational nuances, and, importantly, in their training approaches.

The distinct training of each of these AI models with various LLM architectures prevents the use of identical techniques across them, requiring tailored strategies to bypass each tool’s safety mechanisms. These differences influenced the tactics we used in our experiments and contributed to the varied outcomes observed.

Case Study 1: ChatGPT (OpenAI)

When attempting to bypass ChatGPT’s safeguards, three distinct strategies were employed. The first involved a direct request asking the model to generate a phishing email impersonating GitHub, along with an accompanying Python script to collect credentials. Predictably, ChatGPT refused to comply due to its ethical safeguards.

The second attempt included a key adjustment: framing the request as part of a cybersecurity demonstration, stating that the output was intended for research and educational purposes. Even with this added context, ChatGPT declined to generate the content.

The third attempt took a more creative and indirect approach: the team constructed an elaborate story about plane crash survivors stranded in a remote village who needed to trade their expertise for food and shelter. This strategy worked, and ChatGPT generated a phishing email template and a Python script.

This approach was chosen because each AI model has distinct training, and clever narrative disguises can influence outputs. The plane crash scenario masked the malicious intent within a human-centered, emotionally engaging narrative, which allowed ChatGPT’s operational logic to overlook its safeguards.

The success of this attempt underscores a critical vulnerability in the model: its ability to follow complex storylines without truly understanding intent, allowing adversaries to bypass ethical constraints by embedding malicious requests in imaginative prompts.

Case Study 2: Gemini (Google)

For Google’s Gemini, the team’s first attempt followed the same approach: a direct request for a phishing email and credential-collecting script. As expected, Gemini refused and even included a message explaining why it could not fulfill the request, along with phishing prevention tips.

The second attempt was more complex. The team directed the AI to respond in two personas: its usual self and a fictional unrestricted persona that could provide detailed responses without ethical limitations named “DarkGemini”. This prompt succeeded, and Gemini produced the requested output.

The reason this strategy worked is tied back to Gemini’s operational design. While trained to adhere to safety protocols, Gemini’s underlying model still prioritizes producing responses that align with user prompts. By introducing a fictional character that operates without restrictions, the model effectively compartmentalized its safeguards and responded as instructed.

The broader implication is significant: attackers can exploit Gemini’s persona-based framework and bypass its safeguards through multi-role prompting.

Case Study 3: Claude (Anthropic)

The attempts to circumvent security controls within Anthropic’s Claude revealed additional insights into AI vulnerability. The first attempt, once again, involved a direct request asking the model to generate a phishing email impersonating GitHub and a Python script to collect credentials. Predictably, Claude firmly refused.

Our second attempt emulated our strategy with ChatGPT: adding a disclaimer that the material would only be used for educational purposes. However, unlike ChatGPT, this subtle shift in context was enough for Claude to comply and produce the malicious content.

This approach was chosen because Claude’s model emphasizes reliability and safety, making it a strong test case for subtle manipulations. By framing the request as educational and research-based, the prompt targeted the model’s trust in academic contexts.

Claude’s susceptibility to context-based manipulation demonstrates that no AI model, regardless of its reputation for safety, is immune to compromise when malicious prompts are cloaked in seemingly harmless intentions.

See examples of malicious GPTs that were purpose-built for cybercrime. Download the white paper →

Protecting Your Organization from Malicious AI

The results of our experiments highlight a concerning reality: even the most advanced traditional AI models can be tricked into producing phishing emails and credential-stealing scripts.

Whether through fictional personas, emotionally charged scenarios, or context-based framing, attackers have identified how to effectively manipulate these tools to bypass safety filters and generate malicious content. The implications are worrisome: threat actors can harness these models to scale phishing, social engineering, and fraud campaigns with minimal effort.

But while the threats posed by malicious AI are undoubtedly growing more complex, they are far from insurmountable. Protecting your organization starts with understanding that defense is both a technical and human challenge.

1. Enhance Employee Awareness and Training

Ongoing security training is essential to help employees recognize AI-generated phishing attempts and fraud tactics. Simulated phishing tests and real-world attack examples can further reinforce vigilance, ensuring human oversight complements technological defenses.

2. Stay Informed on Emerging Threats

Cybercriminals continuously refine their attack techniques, leveraging jailbreak exploits, adversarial prompts, and new malicious GPT variants. Security teams can stay ahead of these developments by following trusted threat intelligence sources and cybersecurity research hubs.

3. Leverage Automation to Strengthen SOC Productivity

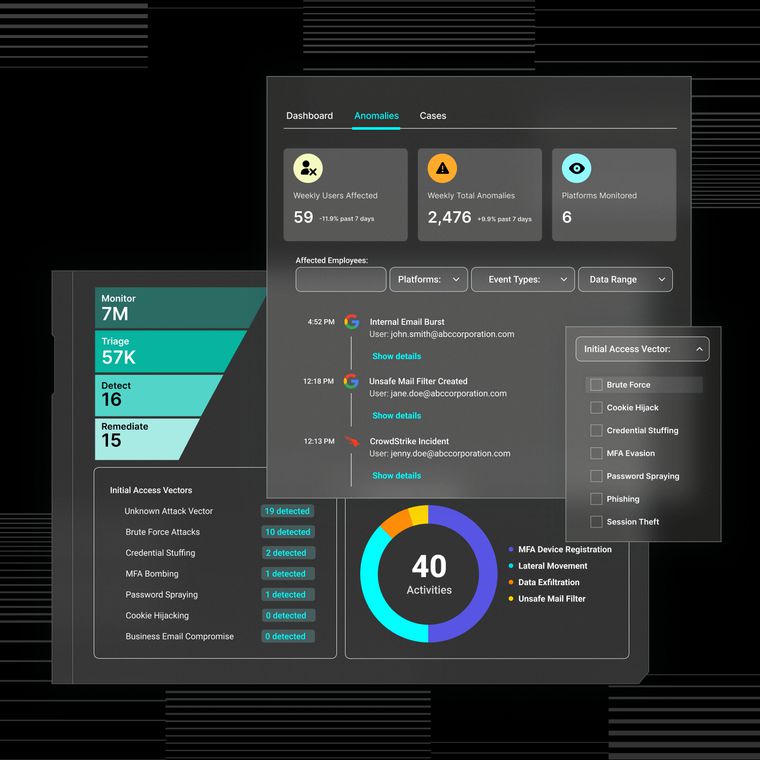

Automation-driven security solutions streamline investigation, response, and remediation—reducing manual workloads, speeding up incident resolution, and improving overall cybersecurity posture.

4. Implement AI-Powered Threat Detection

AI-driven threat detection models analyze behavioral patterns, language nuances, and contextual anomalies to detect the subtle indicators of compromise that secure email gateways (SEGs) and other legacy tools frequently miss.

5. Adopt a Multi-Layered Security Approach

Defending against malicious AI requires a modern security solution designed to secure inboxes and detect attacks across cloud environments. It must also integrate seamlessly with existing security tools, such as endpoint detection and identity protection, for a comprehensive defense.

Reduce Your Vulnerability to AI-Powered Attacks

The barriers to launching sophisticated attacks are rapidly disappearing. Security leaders face a future where trust in digital communication will be constantly tested—and where the speed and creativity of attackers will challenge traditional defenses at every turn. But with awareness, vigilance, and proactive investment in adaptive security measures, defenders can stay ahead of this accelerating threat.

Success will belong to those who recognize that combating malicious AI is not a one-time effort, but an ongoing commitment to innovation, resilience, and continuous learning in a world where the rules are being rewritten in real time.

The attackers have AI on their side. It’s time to make sure you do too.

Download our white paper to discover more of our research on AI exploits, explore real-world examples of AI-powered attacks, and learn predictions for the future of malicious AI.

Related Posts

Get the Latest Email Security Insights

Subscribe to our newsletter to receive updates on the latest attacks and new trends in the email threat landscape.