Building a Scalable Cybersecurity Analytics Framework for Your Business

Build a cybersecurity analytics framework that grows with your business and improves threat detection.

July 31, 2025

Timely and accurate intelligence is essential in today’s cybersecurity landscape. Scalable analytics converts raw security data into actionable insights, helping organizations detect and respond to threats before they escalate.

Attackers frequently move between cloud workloads and endpoints, making it crucial to identify suspicious activity across the environment. Threat detection that once took days can now be completed in minutes through the correlation of behavioral anomalies and global threat intelligence. This enables early identification of compromised credentials and unusual patterns.

To keep pace with evolving threats, organizations need an analytics framework that scales with their digital footprint. A well-structured system minimizes alert fatigue and uncovers hidden risks that legacy tools may miss.

The following framework outlines key areas to focus on for effective cybersecurity analytics.

Define Clear Goals and Use Cases

Before investing in tools or building analytics pipelines, organizations must first clarify what they’re solving for. Without defined objectives, even the most advanced analytics can lack direction and fail to deliver value.

A successful security analytics program begins with well-defined goals that align with business priorities. Clear objectives ensure analytics efforts drive measurable outcomes and gain support from executive leadership.

Align Analytics with Business Risk

To gain strategic relevance, frame security goals in terms of business impact. For example, determine whether a ransomware attack could disrupt revenue or if non-compliance could lead to significant fines. Link analytics use cases to tangible risks such as financial loss, reputational harm, intellectual property theft, or regulatory exposure. This approach helps quantify why rapid threat detection matters and clarifies priorities.

For instance, detecting insider threats may take precedence over low-level malware if brand reputation is at stake. Collaborate with finance, legal, and operations leaders to focus on risks the organization values most.

Map Goals to the NIST Cybersecurity Framework

Once risks are identified, translate them into action using the functions of the NIST Cybersecurity Framework: Identify, Protect, Detect, Respond, and Recover, plus the Govern function added in CSF 2.0. This structure helps ensure comprehensive coverage and aligns efforts with industry standards.

For instance, if intellectual property protection is a priority, “Detect” efforts might focus on identifying unusual access to source code, while “Recover” measures how quickly systems can be restored. Aligning risks to framework functions streamlines reporting and clarifies which data sources and analytics rules support each objective.
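One lightweight way to keep this mapping honest is to record each use case against a framework function and check which functions have no coverage yet. The sketch below is illustrative only: the use cases, risks, and data sources are hypothetical examples, and the function list follows NIST CSF 2.0, which includes a Govern function alongside Identify, Protect, Detect, Respond, and Recover.

```python
from collections import defaultdict

# NIST CSF 2.0 core functions.
CSF_FUNCTIONS = ["Govern", "Identify", "Protect", "Detect", "Respond", "Recover"]

# Hypothetical use cases: (name, business risk, CSF function, data sources).
USE_CASES = [
    ("Unusual source-code access", "IP theft", "Detect", ["git audit logs", "IdP logs"]),
    ("Restore-time tracking", "IP theft", "Recover", ["backup job logs"]),
    ("Privileged account inventory", "Insider misuse", "Identify", ["IAM exports"]),
]


def coverage_by_function(use_cases):
    """Group use-case names under the CSF function each one supports."""
    coverage = defaultdict(list)
    for name, _risk, function, _sources in use_cases:
        if function not in CSF_FUNCTIONS:
            raise ValueError(f"Unknown CSF function: {function}")
        coverage[function].append(name)
    # Return every function, including those with no use cases yet.
    return {function: coverage.get(function, []) for function in CSF_FUNCTIONS}


def uncovered_functions(use_cases):
    """List CSF functions that no use case currently supports."""
    return [f for f, names in coverage_by_function(use_cases).items() if not names]


if __name__ == "__main__":
    for function, names in coverage_by_function(USE_CASES).items():
        print(f"{function}: {', '.join(names) or 'no coverage'}")
```

A table like this doubles as a reporting artifact: gaps show where new data sources or analytics rules are needed before a risk can be claimed as covered.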

Defining clear, risk-based goals and linking them to a recognized framework not only enhances analytics effectiveness but also makes outcomes easier to communicate. This alignment keeps the security team focused on what matters most to the business and delivers measurable value.

Prioritize High-Value Use Cases

Maximize the value of your security analytics by focusing on high-impact use cases that directly address your organization’s most critical risks. Begin with advanced threat detection to surface behavioral anomalies, implement continuous compliance monitoring to streamline audit readiness, prioritize vulnerability management for intelligent patching, and deploy insider threat detection to identify privilege misuse.

These foundational capabilities offer quick wins by leveraging existing data sources. As your program evolves, expand into targeted scenarios such as supply chain risk monitoring, ensuring that each new initiative delivers measurable risk reduction without introducing unnecessary noise.

Align Stakeholders and Define Success Metrics

Drive success by involving key stakeholders across finance, legal, IT, DevOps, and security. Establish clear success criteria and secure necessary resources by translating technical metrics into business terms. For example, convert detection time into potential revenue exposure and tie patch compliance to audit readiness.
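As a rough illustration of translating detection time into business terms, the sketch below multiplies the hours an incident stays active by an hourly revenue figure and the share of revenue affected. This is a deliberately simple model, not a standard formula, and every input (the revenue rate, the impact fraction, the hour counts) is a hypothetical value that finance and operations leaders would need to supply.

```python
def revenue_exposure(mttd_hours, mttr_hours, revenue_per_hour, impact_fraction):
    """Estimate revenue at risk while an incident is undetected or unresolved.

    impact_fraction is the assumed share of revenue affected (0.0 to 1.0).
    """
    if not 0 <= impact_fraction <= 1:
        raise ValueError("impact_fraction must be between 0 and 1")
    if mttd_hours < 0 or mttr_hours < 0:
        raise ValueError("durations must be non-negative")
    return (mttd_hours + mttr_hours) * revenue_per_hour * impact_fraction


if __name__ == "__main__":
    # Hypothetical inputs: 6 h to detect, 4 h to respond,
    # $20,000 revenue per hour, 25% of revenue affected.
    exposure = revenue_exposure(6, 4, 20_000, 0.25)
    print(f"Estimated exposure: ${exposure:,.0f}")
```

Running the same calculation with a shorter detection time gives stakeholders a concrete figure for what faster detection is worth.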

Evaluate your organization’s readiness by mapping critical system dependencies, regulatory requirements, escalation procedures, and visibility gaps. These insights help prioritize investments in analytics capabilities that offer the highest return, creating a roadmap that evolves with emerging threats and business growth.

Establish a Robust Data Foundation

Your analytics program's effectiveness hinges on one critical factor: data quality. Without comprehensive, reliable security telemetry, even the most sophisticated algorithms produce worthless insights. Success demands strategic visibility, rigorous data governance, and infrastructure that evolves with your threat landscape.

Achieve Total Security Visibility

Comprehensive coverage means capturing every security-relevant event across your environment. Your data collection strategy must reveal both normal operations and subtle anomalies that signal compromise.

The core data sources include:

System Logs expose privilege escalations and suspicious file access

Network Flows detect lateral movement that bypasses endpoint controls

Authentication Records track credential misuse and account compromise

Endpoint Telemetry monitors process behavior and system changes

Cloud APIs log configuration modifications and resource access

Threat Intelligence provides context for emerging attack patterns

Security Tool Outputs maintain investigation audit trails

When integrated properly, these diverse data streams create a unified security narrative. Individual alerts become pieces of a larger attack story, enabling analysts to understand attacker methodology and predict next moves.

The goal isn't to collect more data. It's to collect the right data with sufficient fidelity to support accurate, timely threat detection and response.

Data Quality and Processing

Collecting data is only the beginning; accuracy and context matter just as much. De-duplicate events to cut storage costs, standardize formats, and synchronize timestamps across sources. Enrich events with asset criticality, risk scores, and ownership tags. Score data sources by reliability and track quality continuously. When volumes rise, focus on the highest-value signals.
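A minimal sketch of these processing steps, assuming events arrive as dictionaries with `timestamp`, `host`, and `action` fields. Those field names, the `ASSET_CONTEXT` lookup table, and the choice to treat timestamps without an offset as UTC are all assumptions made for illustration; a real pipeline would pull asset context from a CMDB or similar inventory.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical asset inventory used for enrichment.
ASSET_CONTEXT = {
    "web-01": {"criticality": "high", "owner": "ecommerce"},
}
UNKNOWN_ASSET = {"criticality": "unknown", "owner": "unassigned"}


def normalize(event):
    """Standardize field formats and convert the timestamp to UTC."""
    ts = datetime.fromisoformat(event["timestamp"])
    if ts.tzinfo is None:
        # Assumption: timestamps without an offset are already in UTC.
        ts = ts.replace(tzinfo=timezone.utc)
    return {
        "timestamp": ts.astimezone(timezone.utc).isoformat(),
        "host": event["host"].strip().lower(),
        "action": event["action"].strip().lower(),
    }


def fingerprint(event):
    """Hash the normalized fields so duplicates produce the same key."""
    key = "|".join((event["timestamp"], event["host"], event["action"]))
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def process(raw_events):
    """Normalize, de-duplicate, and enrich a batch of events."""
    seen = set()
    processed = []
    for raw in raw_events:
        event = normalize(raw)
        key = fingerprint(event)
        if key in seen:
            continue  # Drop exact duplicates after normalization.
        seen.add(key)
        context = ASSET_CONTEXT.get(event["host"], UNKNOWN_ASSET)
        processed.append({**event, **context})
    return processed
```

Normalizing before hashing matters: two copies of the same event reported in different time zones or letter cases only collapse into one record once their fields agree.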

Architect for Scale and Speed

Support growth with scalable design. Real-time pipelines catch threats fast; data lakes enable deep analysis. Use stream processing for instant anomaly detection and centralized platforms for search and correlation.
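To make the stream-processing idea concrete, here is a small sketch of anomaly detection over a rolling window using a z-score. It stands in for what a streaming platform would do at scale; the class name, window size, and threshold are hypothetical choices, and a z-score is only one of many possible detection techniques.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate sharply from a rolling baseline."""

    def __init__(self, window=30, threshold=3.0, min_samples=5):
        self.values = deque(maxlen=window)  # Oldest values fall off automatically.
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            baseline = mean(self.values)
            spread = pstdev(self.values)
            # A perfectly flat window gives no spread to compare against,
            # so no z-score is computed in that case.
            if spread > 0 and abs(value - baseline) / spread > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so one spike
            # does not mask the next.
            self.values.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingAnomalyDetector()
    # Hypothetical per-minute failed-login counts for one account.
    for count in [10, 12, 11, 9, 10, 11, 10, 12, 50, 11]:
        if detector.observe(count):
            print(f"Anomalous failed-login count: {count}")
```

Because the detector keeps only a bounded window in memory, one instance per user or host can run inside a stream consumer, while the data lake retains full history for deeper analysis.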

Build systems that scale horizontally and connect via APIs. Map data to business-critical assets, fill gaps, and prioritize fixes by impact. Reassess quarterly to stay aligned with evolving risks.

A reliable, scalable data pipeline empowers faster, smarter threat response.

Select Flexible and Scalable Tools

Select platforms that automate detection, apply machine learning, and integrate smoothly with your existing stack. The right tools streamline workflows, scale with demand, and help analysts focus on real threats.

Automation is key, so choose tools that enrich alerts and trigger response playbooks without manual effort. Solutions with SOAR capabilities cut triage time and support flexible, customizable workflows.

Machine learning enhances detection by identifying subtle anomalies using UEBA and real-time data correlation. Ensure models update continuously to stay effective against evolving threats.

Integration and Scalability

Effective integration expands your visibility. Choose platforms with APIs and built-in connectors to pull data from cloud apps, endpoints, and networks, while sending actions to firewalls, identity systems, and ticketing tools. Broad integration speeds deployment and ensures end-to-end threat detection.

Scalability matters as data volumes grow. Cloud-native tools can scale elastically with demand, while on-premises solutions require careful capacity planning but help meet data residency requirements. Hybrid models offer flexibility, storing sensitive data locally while handling peak loads in the cloud.

Usability and Vendor Fit

Analyst adoption depends on ease of use. Look for dashboards that turn data into clear insights and workbenches that link logs to context. Always test candidate tools with your own data before committing.

When comparing tools, assess support, customization, and total cost, including storage and operations. Match your top use cases to each vendor’s strengths to choose a solution that can grow with your needs.

Develop Skilled Teams and Processes

Strong teams with clear processes turn raw data into fast, effective decisions. Invest in targeted training, standardized workflows, and performance metrics to support continuous improvement. Train analysts using NIST-aligned modules that connect daily tasks to business risk reduction. Go beyond certifications: teach behavioral analytics, SIEM/SOAR tuning, and machine learning basics, and strengthen threat intelligence and reporting skills. For advanced teams, use UEBA and mentoring programs to build expertise and avoid knowledge gaps.

Process Development and Collaboration

Training matters, but clear workflows close incidents. Link analytics alerts to playbooks with steps for triage, containment, and post-incident review. Keep playbooks updated through ongoing risk assessments. Automate routine tasks with SOAR to free analysts for deeper investigations.
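The link between alerts and playbooks can be as simple as a lookup table, sketched below. The alert types, playbook steps, and field names are hypothetical and are not taken from any particular SOAR product; the point is that every alert resolves to an explicit sequence of triage, containment, and review steps, with a default for unrecognized types.

```python
# Hypothetical playbooks keyed by alert type.
PLAYBOOKS = {
    "credential_compromise": [
        "triage: confirm the anomalous sign-in with the account owner",
        "containment: reset credentials and revoke active sessions",
        "review: record root cause and detection gaps in the ticket",
    ],
    "malware_detected": [
        "triage: verify the detection on the endpoint",
        "containment: isolate the host from the network",
        "review: update detection rules with new indicators",
    ],
}

# Fallback so unrecognized alert types still reach a human.
DEFAULT_PLAYBOOK = ["triage: assign to the on-call analyst for manual review"]


def route_alert(alert):
    """Attach the matching playbook steps to an alert."""
    steps = PLAYBOOKS.get(alert["type"], DEFAULT_PLAYBOOK)
    return {
        "alert_id": alert["id"],
        "severity": alert.get("severity", "medium"),
        "steps": list(steps),  # Copy so callers cannot mutate the template.
    }


if __name__ == "__main__":
    task = route_alert({"id": "A-1", "type": "credential_compromise", "severity": "high"})
    for step in task["steps"]:
        print(step)
```

Keeping playbooks as data rather than code makes them easier to revise after each risk assessment or post-incident review.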

Collaboration boosts impact. Coordinate hand-offs between threat, IT, and compliance teams. Document decisions in ticketing systems to improve future detection and response.

Track Performance and Improve Continuously

Use KPIs like mean time to detect/respond, false-positive rate, and analyst workload to measure effectiveness. Review weekly, spot gaps, and adjust training or staffing as needed. Combining training, automation, and metrics gives your team the agility to stay ahead of evolving threats.

Continuously Measure and Improve

Measurement turns your security analytics into an adaptive defense system. Focus on tracking the right metrics, validating control effectiveness, and driving consistent improvements.

Here are some steps that you can take:

Monitor Detection and Response Speed: Speed shows effectiveness. Track metrics like mean time to detect, mean time to respond, and incident resolution rates. Review weekly dashboards to catch performance dips early. Delays often signal issues with log coverage, alert quality, or staffing.

Measure Risk Exposure and Remediation: Detection alone isn't enough, because unpatched vulnerabilities still pose risk. Monitor KPIs like vulnerability detection, patching rates, and mean time to patch. Pull data from ticketing and configuration management systems. Escalate delays that exceed SLAs to risk councils to prevent backlog growth.

Validate User and Control Performance: Assess both human and technical layers. Track training completion, phishing simulation click rates, false positives, and control coverage. High click or false positive rates drain analyst time and weaken defense. Use these insights to refine training, tune alerts, and remove noisy rules.
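Several of the metrics above can be computed directly from incident records. The sketch below derives mean time to detect, mean time to respond, and false-positive rate, assuming each record carries ISO 8601 timestamps in hypothetical `occurred`, `detected`, and `resolved` fields plus a `false_positive` flag. Real ticketing exports will use different field names and will need cleaning first.

```python
from datetime import datetime
from statistics import mean


def hours_between(start, end):
    """Elapsed hours between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def security_kpis(incidents):
    """Compute MTTD, MTTR, and false-positive rate for a list of incidents.

    Timing metrics use confirmed incidents only; at least one is required.
    """
    if not incidents:
        raise ValueError("no incidents to measure")
    confirmed = [i for i in incidents if not i["false_positive"]]
    if not confirmed:
        raise ValueError("no confirmed incidents to time")
    return {
        "mttd_hours": mean([hours_between(i["occurred"], i["detected"]) for i in confirmed]),
        "mttr_hours": mean([hours_between(i["detected"], i["resolved"]) for i in confirmed]),
        "false_positive_rate": (len(incidents) - len(confirmed)) / len(incidents),
    }


if __name__ == "__main__":
    # Hypothetical sample records.
    sample = [
        {"occurred": "2025-07-01T00:00:00", "detected": "2025-07-01T02:00:00",
         "resolved": "2025-07-01T06:00:00", "false_positive": False},
        {"occurred": "2025-07-02T00:00:00", "detected": "2025-07-02T04:00:00",
         "resolved": "2025-07-02T10:00:00", "false_positive": False},
        {"occurred": "2025-07-03T00:00:00", "detected": "2025-07-03T01:00:00",
         "resolved": "2025-07-03T01:30:00", "false_positive": True},
    ]
    print(security_kpis(sample))
```

Excluding false positives from the timing averages is a design choice: it keeps quickly closed noise from making detection and response look faster than they are.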

Continuous measurement isn’t just about oversight. It’s about making your program smarter and more resilient over time. By regularly assessing speed, exposure, and control effectiveness, you empower your team to stay ahead of threats while aligning with business goals.

Close the Loop with Continuous Improvement

Measurement alone isn't enough. Real value comes from acting on the insights. Establish a structured improvement cycle that links KPIs to each framework function, automates data collection via SIEM queries and dashboards, and reviews trends in monthly operations meetings using the latest threat intelligence.

Use these insights to refine correlation rules, tune machine learning models, and reassess outcomes through continuous threat exposure testing. This ensures that improvements stick and remain effective over time.
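One way to decide which correlation rules to refine first is to rank them by how often analysts close their alerts as false positives. The sketch below assumes each closed alert records a `rule` name and a `verdict`; both field names and both thresholds are hypothetical and should be tuned to your own alert volumes.

```python
from collections import Counter


def noisy_rules(alerts, min_alerts=20, max_fp_rate=0.8):
    """Return rules whose false-positive rate exceeds max_fp_rate.

    Rules with fewer than min_alerts closed alerts are skipped because
    a rate based on a handful of alerts is not reliable.
    """
    totals = Counter(a["rule"] for a in alerts)
    false_positives = Counter(
        a["rule"] for a in alerts if a["verdict"] == "false_positive"
    )
    flagged = []
    for rule, total in totals.items():
        rate = false_positives[rule] / total
        if total >= min_alerts and rate > max_fp_rate:
            flagged.append((rule, total, rate))
    # Noisiest rules first.
    return sorted(flagged, key=lambda item: item[2], reverse=True)
```

Rerunning the same ranking after each tuning round shows whether a change actually reduced noise or simply moved it to another rule.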

Integrate this process into daily workflows so your analytics program evolves alongside emerging threats, turning measurement into a driver of real security gains rather than a source of unused reports.

Future-Proof Your Security with Scalable Analytics

Building a scalable cybersecurity analytics framework is more than a technical initiative. It’s a critical move toward proactive, resilient defense. By aligning analytics with business risk, integrating quality data sources, deploying flexible tools, and empowering skilled teams, your organization can turn raw telemetry into real-time, actionable insight.

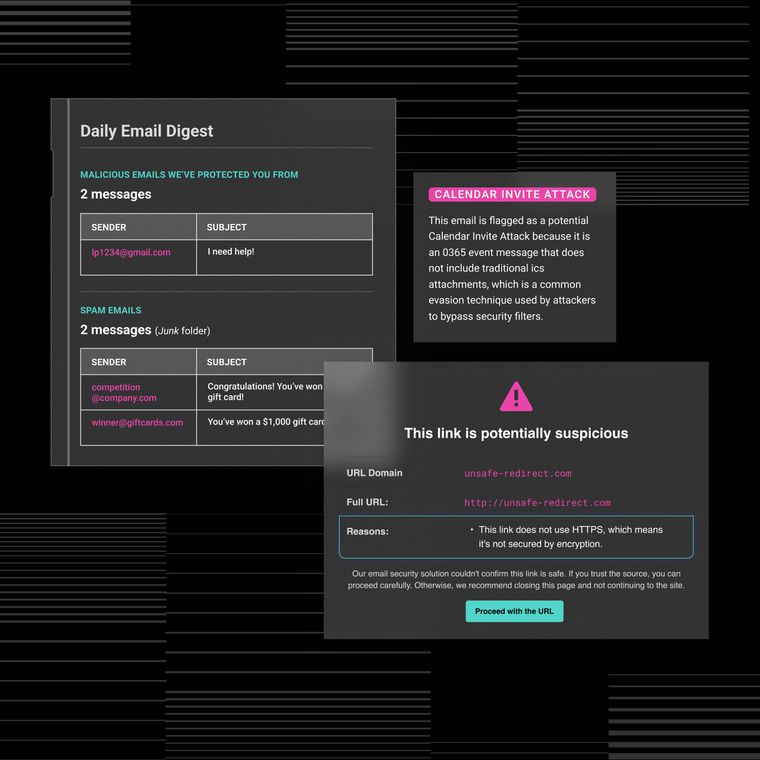

But no framework is complete without addressing the human layer: the inbox, where many attacks begin. Abnormal strengthens your analytics strategy with behavioral AI that analyzes identity, intent, and context to detect the subtle anomalies traditional tools miss. It integrates into your existing ecosystem, providing high-fidelity detection and automated remediation that reduce noise and accelerate response.

Enhance your analytics stack with AI-powered email security that evolves with your threat landscape. Book a demo to see how Abnormal helps you close the human security gap and future-proof your defense.
