Managing Unstructured Data Risks: 10 Practices for Cybersecurity Professionals

Use these best practices to manage unstructured data risks across your environment.

July 20, 2025

Unstructured data, such as emails, design files, medical images, and video content, represents the bulk of organizational information yet remains largely invisible to traditional security tools. This creates a massive attack surface where personal information and corporate IP become prime targets for adversaries. Without fixed schemas, this data sprawls across decentralized repositories, making each phishing attack a potential gateway to treasure troves of unmanaged information.

Organizations face compounding security, compliance, and operational risks as ransomware targets proliferate, regulatory obligations become increasingly difficult to track, and storage costs escalate.

Proactive governance that discovers data, restricts access to it, and monitors its movement is essential for protection without hindering collaboration. The following 10 practices give cybersecurity professionals a concrete framework for mitigating the risks associated with unstructured data.

1. Discover and Classify Unstructured Data

You cannot defend what you cannot see. Automated discovery platforms scan cloud drives, on-premises shares, email stores, and collaboration tools, surfacing hidden files in minutes. Unstructured data discovery platforms and AI-driven discovery engines connect through APIs and agents to create a real-time inventory regardless of where information resides.

Modern classifiers use natural language processing, pattern recognition, and context awareness to tag sensitive elements. These systems identify credit card numbers and financial records, health information and personal details, source code and intellectual property, as well as regulated content that requires specific handling.

Also, create a simple, four-tier taxonomy such as public, internal, confidential, and restricted, so that the labels are intuitive for employees and readable by downstream controls. Automatically apply metadata and sensitivity tags, enabling DLP or CASB policies to trigger without manual intervention.
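As a rough illustration of pattern-based tagging against a four-tier taxonomy, the sketch below uses only the Python standard library. The regular expressions, the `classify` function, and the rule that unmatched content defaults to `internal` are all assumptions made for this example; commercial classifiers combine many more signals, including NLP and context.

```python
import re
from dataclasses import dataclass

# Four-tier taxonomy from the text, ordered from least to most sensitive.
TIERS = ["public", "internal", "confidential", "restricted"]

# Illustrative patterns only (assumptions for this sketch).
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CONFIDENTIAL_RE = re.compile(r"\bconfidential\b", re.IGNORECASE)


def luhn_valid(candidate: str) -> bool:
    """Return True if the digits in candidate pass the Luhn checksum."""
    digits = [int(c) for c in candidate if c.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


@dataclass
class Classification:
    tier: str
    reasons: list


def classify(text: str) -> Classification:
    """Assign a sensitivity tier plus the reasons that triggered it."""
    reasons = []
    if any(luhn_valid(m.group()) for m in CARD_RE.finditer(text)):
        reasons.append("payment_card")
    if SSN_RE.search(text):
        reasons.append("us_ssn")
    if reasons:
        return Classification("restricted", reasons)
    if CONFIDENTIAL_RE.search(text):
        return Classification("confidential", ["confidential_marker"])
    # Conservative default: nothing is labeled "public" automatically.
    return Classification("internal", [])
```

The returned tier and reasons could be written to file metadata so that downstream DLP or CASB policies can act on them. The Luhn check is there to reduce false positives from arbitrary digit runs.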

Start with high-risk repositories, such as legacy file shares and executive inboxes. Early wins in these areas prove value and free up resources for broader coverage. This foundation enables effective mapping of where your most sensitive information actually lives across your environment.

2. Map Where Unstructured Data Lives

A comprehensive inventory reveals how files travel through your environment and where they accumulate outside your security controls. Build it as follows:

Catalog every repository, endpoint, network share, email server, cloud bucket, and collaboration platform, such as Slack, Teams, and SharePoint. Include personal devices and unsanctioned cloud drives where employees routinely store project files.

Use discovery scans to surface shadow IT locations that bypass your security controls. Create flow diagrams that show how files are transferred between systems, contractors, and partners. This visibility enables you to tier repositories by business criticality and apply stricter controls to the highest-risk stores.

Maintain current documentation that auditors require during breach investigations or regulatory inquiries. Without accurate mapping, you cannot effectively protect or respond to incidents involving sensitive files.

With clear visibility into your information landscape, you can begin reducing exposure through strategic minimization.

3. Implement Data Minimization Policies

Information minimization reduces risk by collecting and retaining only the files your organization truly needs. Here’s how you can get started:

Ground your policy in core principles: purpose limitation, necessity, and storage limitation. Translate those concepts into concrete timelines that teams can follow. Define retention schedules for email, project documents, and chat logs based on legal, regulatory, and business requirements. Make sure the schedules account for legal holds and industry-specific obligations, then publish them and document any exceptions so they can be shown to regulators.

Manually finding redundant, obsolete, or trivial files is impossible at enterprise scale. Deploy classification tools that flag duplicates, stale versions, and low-value content, then trigger scripted deletion or low-cost archival. Platforms that combine deduplication, pattern matching, and rule-based purges enable weekly "digital shredding" jobs while logging every action for auditors.
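The flagging step can be sketched in a few lines. The example below assumes that duplicates are files with identical SHA-256 content hashes and that "stale" means not modified within a configurable number of days. The `find_rot` function and its 365-day default are hypothetical. It only reports candidates and deletes nothing, so results can be reviewed against retention schedules and legal holds first.

```python
import hashlib
import time
from collections import defaultdict
from pathlib import Path
from typing import Optional


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files are not loaded into memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_rot(root: Path, stale_days: int = 365, now: Optional[float] = None):
    """Return (duplicates, stale) under root without modifying anything.

    duplicates maps a content hash to the list of paths sharing it;
    stale lists files whose modification time is older than stale_days.
    """
    now = time.time() if now is None else now
    cutoff = now - stale_days * 86400
    by_hash = defaultdict(list)
    stale = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        by_hash[sha256_of(path)].append(path)
        if path.stat().st_mtime < cutoff:
            stale.append(path)
    duplicates = {h: ps for h, ps in by_hash.items() if len(ps) > 1}
    return duplicates, stale
```

A scheduled job could feed these two lists into an approval workflow and log each resulting deletion or archival action for auditors.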

When information must be preserved for analytics, replace direct identifiers with tokens or pseudonyms to maintain confidentiality. Proper pseudonymization maintains business insight without exposing personal information, a best practice reinforced by privacy experts at Piiano. The result is leaner storage bills, smaller breach blast radius, and faster recovery when incidents strike.
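One common way to replace direct identifiers with stable pseudonyms is a keyed hash. The sketch below uses HMAC-SHA-256 from the Python standard library. The `pseudonymize` function, the `tok_` prefix, and the truncation length are assumptions for illustration, and the secret key would need to live in a key management system, separate from the data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable token using a keyed hash (HMAC-SHA-256).

    The same identifier and key always yield the same token, so joins and
    counts still work, but the token cannot be recomputed without the key.
    """
    normalized = identifier.strip().lower()
    digest = hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256)
    return "tok_" + digest.hexdigest()[:32]
```

Because the mapping is deterministic, analysts can still group records by token. Anyone holding the key can recompute tokens for known identifiers, so the output is pseudonymous rather than anonymous.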

Effective minimization works hand in hand with strict access controls to limit who can access remaining sensitive files.

4. Control Access With Least Privilege Principles

Least privilege limits exposure by granting users only the access required for their specific roles. Start with a comprehensive permissions audit across shared drives, mailboxes, and collaboration platforms, then remove any unnecessary rights.

Role-based access control maintains this structure: sales teams may receive read access to account folders, legal staff are assigned access to archive documents as their roles require, and no user gets owner privileges by default.

On cloud platforms, scan continuously for oversharing through "anyone" links and replace them with named-user shares that expire automatically. For highly sensitive documents, implement just-in-time access that closes automatically when tasks are completed.
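A scan for oversharing might look like the sketch below. It assumes share metadata has already been exported from the cloud platform into simple records. The `ShareLink` fields, the scope names, and the 30-day maximum lifetime are hypothetical and do not correspond to any specific vendor's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ShareLink:
    file_path: str
    scope: str  # assumed values: "anyone", "organization", "named_user"
    expires_at: Optional[datetime]


def audit_links(links, now, max_lifetime=timedelta(days=30)):
    """Return (file_path, recommended_action) pairs for risky share links."""
    findings = []
    for link in links:
        if link.scope == "anyone":
            findings.append((link.file_path, "replace with named-user share"))
        elif link.expires_at is None:
            findings.append((link.file_path, "add expiry"))
        elif link.expires_at - now > max_lifetime:
            findings.append((link.file_path, "shorten expiry"))
    return findings


now = datetime(2025, 7, 20, tzinfo=timezone.utc)
example_links = [
    ShareLink("/finance/q2.xlsx", "anyone", None),
    ShareLink("/legal/nda.pdf", "named_user", None),
    ShareLink("/eng/spec.docx", "named_user", now + timedelta(days=90)),
    ShareLink("/sales/deck.pptx", "named_user", now + timedelta(days=7)),
]
example_findings = audit_links(example_links, now)
```

In this example only `/sales/deck.pptx` passes, since it is shared with a named user and expires within the allowed window.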

Modern governance tools identify over-permissioned accounts in real time and trigger automated workflows to revoke or downgrade access before these become breach pathways.

Once access controls are in place, continuous monitoring ensures they remain effective against evolving threats.

5. Monitor and Audit File Activity

Continuous monitoring of file activities provides the visibility needed to detect potential information theft, insider threats, and unauthorized access before damage occurs. That’s why you need to stream every access attempt, download, share, edit, and bulk deletion into a central log for comprehensive oversight.

Deploy behavioral analytics that establish normal usage patterns for each user and repository, moving beyond simple rule-based detection to identify subtle anomalies that pattern matching alone misses.

When behavioral models flag outliers, such as midnight exports of hundreds of client files or unusual bulk downloads, immediate alerts enable rapid response to cut off suspicious sessions.

Configure real-time notifications for high-risk activities, such as sensitive information being shared externally or mass file deletions that could indicate ransomware activity.

Next, maintain immutable audit logs to preserve forensic evidence and satisfy compliance requirements. Regular audits and monitoring serve as mandatory controls for regulatory frameworks. Establish baseline activity volumes for each storage location to transform routine variance into actionable intelligence when attackers, insiders, or misconfigured scripts begin exfiltrating information at abnormal rates.
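Baselining can be as simple as comparing today's activity with a recent history. The sketch below flags a daily download count that sits more than a chosen number of standard deviations above the historical mean. The `is_anomalous` function and the threshold of 3 are illustrative assumptions, not a recommended detection model.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's count if it is far above the historical baseline.

    history is a list of past daily counts for one user or repository.
    Only upward spikes are flagged, since the concern here is bulk
    export or deletion rather than unusually quiet days.
    """
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma > threshold


baseline = [10, 12, 9, 11, 10, 13, 8]  # hypothetical daily download counts
```

With this baseline, a day with 400 downloads is flagged while a day with 12 is not. Real deployments typically account for seasonality, peer groups, and time of day as well.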

Monitoring reveals threats, but encryption ensures stolen files remain unreadable.

6. Encrypt Data at Rest and in Transit

Encryption renders files unreadable to attackers. Deploy multiple protection layers across all file locations: endpoints, servers, cloud, and SaaS platforms.

Implement full-disk encryption on endpoints and file-level encryption for shared repositories, using AES-256 for data at rest and TLS 1.3 for data in transit. Extend coverage to mobile devices through MDM. For SaaS platforms, employ bring-your-own-key options when available.
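For the in-transit side, a client can refuse anything older than TLS 1.3. The sketch below uses Python's standard `ssl` module. The `tls13_client_context` helper name is an assumption, and the setting requires a Python build linked against an OpenSSL version that supports TLS 1.3.

```python
import ssl


def tls13_client_context() -> ssl.SSLContext:
    """Build a client context that verifies certificates and requires TLS 1.3."""
    # create_default_context enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Reject handshakes that would negotiate TLS 1.2 or older.
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context
```

The returned context can be passed to standard-library clients, for example as the `context` argument of `urllib.request.urlopen`. Connections to servers that only support older protocol versions will then fail instead of silently downgrading.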

Effective key management is critical: centralize keys in HSMs or cloud KMS, enforce annual rotation, and implement strict role-based access to separate encrypted data from its keys. Log all key operations.
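Rotation policies are easier to enforce when something checks key age automatically. The sketch below assumes key metadata has been exported from an HSM or cloud KMS into simple records. `KeyRecord`, `keys_due_for_rotation`, and the sample key IDs are hypothetical names, and the 365-day default mirrors the annual rotation mentioned above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class KeyRecord:
    key_id: str
    created_at: datetime


def keys_due_for_rotation(keys, now, max_age=timedelta(days=365)):
    """Return the IDs of keys whose age meets or exceeds max_age."""
    return [k.key_id for k in keys if now - k.created_at >= max_age]


now = datetime(2025, 7, 20, tzinfo=timezone.utc)
example_keys = [
    KeyRecord("fileshare-kek", datetime(2024, 1, 1, tzinfo=timezone.utc)),
    KeyRecord("mail-archive-kek", datetime(2025, 1, 1, tzinfo=timezone.utc)),
]
due = keys_due_for_rotation(example_keys, now)
```

Here only `fileshare-kek` is reported, since it was created more than a year before the check date. The output could feed a ticketing workflow, with each resulting key operation logged.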

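Balance security with usability through format-preserving encryption, which keeps ciphertext in the same length and format as the original value so that existing applications, validation rules, and exact-match lookups continue to work without full decryption. Data stays protected during audits while remaining usable by compliance teams.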

7. Train Employees on Unstructured Data Risks

Employee behavior often decides whether sensitive information remains secure or becomes a breach headline. You need a program that targets risky habits, builds situational awareness, and proves its impact.

Follow these steps to get started:

Start by showing employees how their everyday actions expose confidential content. Files live on endpoints, in email threads, and in collaboration tools, where they are easy to overshare or misplace.

When staff store project folders on personal drives or unauthorized cloud storage, they create shadow IT blind spots that expand your attack surface. Dedicated modules should cover selecting the right repository, setting granular sharing permissions, and recognizing sensitive patterns.

Illustrate consequences with real incidents, from classic Nigerian Prince scams to modern business email compromise. Show how attackers now use tools to automate phishing content, increasing both volume and believability.

Tailor sessions to roles. Your legal team needs deeper guidance on sensitive content such as attorney-client material, while developers must secure source code. Practical, role-based scenarios turn abstract policy into remembered action.

Shift to microlearning with five-minute refreshers that remind staff how "anyone with the link" sharing leaves content public. Deliver tips at the point of use, such as a prompt inside your cloud drive that appears when someone tries to export a customer list.

Reinforce lessons with positive metrics by tracking the drop in externally exposed folders, the percentage of files correctly labeled, and incident response time for accidental leaks. Pair these indicators with frequent pulse surveys to gauge confidence.

When you can show fewer policy violations and smaller storage footprints quarter over quarter, training proves its value.

8. Integrate Unstructured Data Into Incident Response Plans

Your incident response plan must specifically address file-based risks—document leaks, mis-shared folders, and ransomware on file shares—because traditional playbooks ignore these messy realities.

Ransomware crews increasingly use double-extortion tactics that encrypt and exfiltrate files such as legal agreements or design specs, turning every shared drive into a liability and forcing rapid, multi-team coordination for containment and negotiation.

Map critical repositories and link each one to clear isolation steps, including revoking public links, disabling sync jobs, and snapshotting affected volumes for forensic imaging. Maintain a live inventory so you can immediately identify which files, owners, and third parties are exposed—vital for meeting tight breach-notification deadlines highlighted by privacy counsel.

Pre-approved notification templates, evidence-preservation checklists, and hashed backup copies ensure you maintain chain of custody while restoring operations quickly and defensibly.
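Hashing evidence at collection time is one way to support chain of custody. The sketch below records a SHA-256 digest for every file in an evidence directory and can later report any file that has changed or gone missing. The function names and manifest layout are assumptions for illustration. Files are read whole for brevity, so very large images would need chunked hashing.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def build_manifest(evidence_dir: Path) -> dict:
    """Record a SHA-256 digest for every file under evidence_dir."""
    entries = {}
    for path in sorted(p for p in evidence_dir.rglob("*") if p.is_file()):
        rel = path.relative_to(evidence_dir).as_posix()
        entries[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "sha256": entries,
    }


def verify_manifest(evidence_dir: Path, manifest: dict) -> list:
    """Return relative paths that are missing or whose digest has changed."""
    mismatches = []
    for rel, expected in manifest["sha256"].items():
        path = evidence_dir / rel
        if not path.is_file():
            mismatches.append(rel)
        elif hashlib.sha256(path.read_bytes()).hexdigest() != expected:
            mismatches.append(rel)
    return mismatches
```

Storing the manifest separately from the evidence, ideally in write-once storage, makes later tampering with either one easier to detect.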

Effective incident response requires alignment with broader regulatory requirements that govern the handling of sensitive information.

9. Align With Regulatory Frameworks

Files fall under direct regulatory scrutiny, requiring a repeatable framework that demonstrates control over every document across your organization. Tag repositories against key mandates, such as GDPR, CCPA, and HIPAA, to fulfill individual rights, including erasure, portability, and breach notification, without scrambling.

Email threads and shared drive folders often contain personal information. Under GDPR's "right to be forgotten," this content must be located and deleted on request, a task that proves nearly impossible without precise classification and retention rules. Also, document the safeguards you apply, such as access controls, encryption, and retention timers, and store this evidence for auditors.

Additionally, automate policy checks that flag files lacking appropriate labels or exceeding statutory retention windows to maintain continuous compliance. Record the location of each dataset; many jurisdictions restrict cross-border transfers, so maintaining a granular inventory of storage locations streamlines both approvals and incident response.
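An automated policy check of this kind can be sketched as a pass over an exported file inventory. Everything below is hypothetical: the `FileRecord` fields, the categories, and the retention periods in `RETENTION_DAYS` are placeholders, and real values must come from your own legal and regulatory requirements.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Placeholder retention windows in days; not legal guidance.
RETENTION_DAYS = {"email": 365 * 3, "chat": 365, "project_doc": 365 * 7}


@dataclass
class FileRecord:
    path: str
    category: str
    label: Optional[str]
    created: date
    legal_hold: bool = False


def policy_violations(records, today):
    """Return (path, violation) pairs for unlabeled or over-retained files."""
    findings = []
    for r in records:
        if not r.label:
            findings.append((r.path, "missing_label"))
        limit = RETENTION_DAYS.get(r.category)
        over_limit = limit is not None and (today - r.created).days > limit
        # Files under legal hold are exempt from retention-based flags.
        if over_limit and not r.legal_hold:
            findings.append((r.path, "retention_exceeded"))
    return findings


today = date(2025, 7, 20)
inventory = [
    FileRecord("/chat/2023-export.json", "chat", "internal", date(2023, 1, 1)),
    FileRecord("/mail/offer.eml", "email", None, date(2025, 1, 1)),
    FileRecord("/chat/dispute.json", "chat", "restricted", date(2020, 1, 1), legal_hold=True),
]
violations = policy_violations(inventory, today)
```

In this example the old chat export is flagged for retention, the unlabeled email is flagged for a missing label, and the file under legal hold is left alone.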

Regulatory alignment works best when supported by dedicated technology solutions that enforce policies automatically.

10. Leverage DLP and CASB Solutions

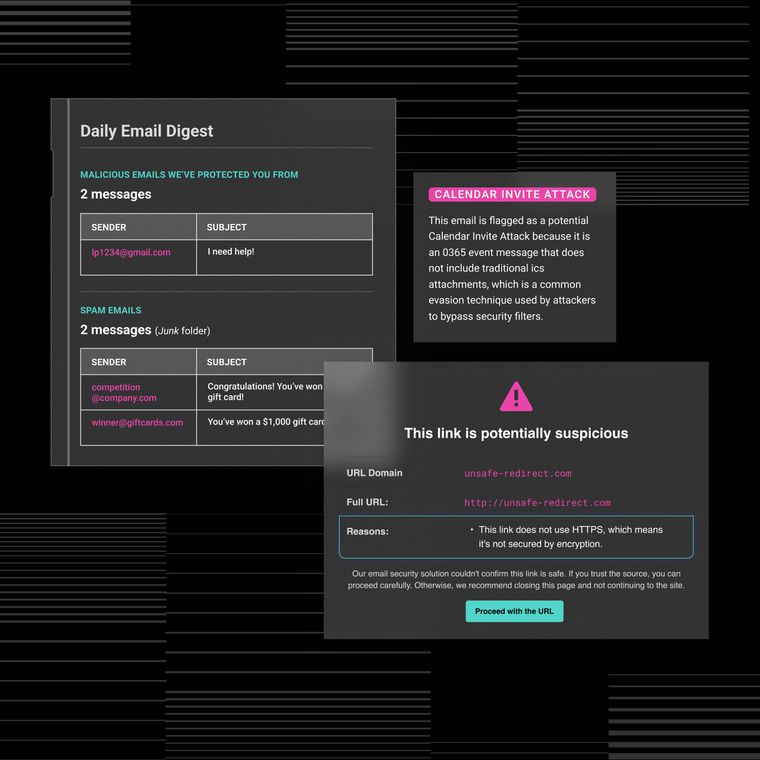

DLP and CASB solutions enforce policies to prevent data exfiltration. DLP engines discover, classify, and quarantine sensitive content before it exits the network, while CASBs extend protection to cloud environments by enforcing sharing rules and encrypting documents.

Email security gateways scan attachments and links before delivery, and correctly configured MX records ensure that inbound mail is routed through them. Because CASBs and email security platforms protect different channels, compare their capabilities to confirm that neither leaves a gap. Integrate all controls with SIEM and EDR/XDR systems for unified incident management. After deployment, baseline normal activity and refine policies iteratively, combining continuous tuning with AI-driven inspection to maintain protection without disrupting workflows.

File Protection Demands Ongoing Vigilance

Embed these ten practices into daily operations through regular audits and adjustments. As threats evolve, regulations change, and processes shift, your protection strategy must adapt accordingly.

Success requires collaboration: security teams establish guardrails, IT implements controls, compliance maintains audit trails, and business units provide context about the criticality of data. When everyone shares ownership, gaps are quickly identified and remedied before they become incidents.

Regular cross-functional reviews ensure controls remain effective as your information landscape evolves. Finally, treat files as first-class citizens in your security strategy. Schedule quarterly assessments, assign clear ownership, and measure progress through specific metrics rather than compliance checkboxes.
