Top Cybersecurity Vulnerabilities in Higher Ed That AI Can Address

AI can help address top cybersecurity vulnerabilities in higher ed institutions, improving defenses against emerging threats.

October 21, 2025

Higher education institutions face unique cybersecurity challenges that set them apart from other sectors. Academic environments must protect diverse user populations and maintain open collaboration networks while managing constrained budgets. Traditional corporate security models fail in these settings because they assume standardized communication patterns and access controls that clash with academic freedom.

Education ranked as the fourth-most-targeted sector during the first half of 2025, making robust defenses critical. However, conventional approaches struggle to accommodate the varying technical competencies across students, faculty, and staff. Higher education institutions need security solutions that balance protection with accessibility while adapting to constantly changing user populations and collaboration requirements.

AI-powered behavioral analysis addresses these gaps by learning normal patterns within diverse academic communities and detecting threats that traditional tools miss.

Compromised Faculty Accounts Fly Under Security Radar

Faculty email accounts attract targeted attacks because threat actors weaponize institutional trust rather than exploiting technical vulnerabilities. When attackers breach faculty credentials through authentication system exploitation and MFA bypass methods, they establish persistent access with elevated privileges that enable network lateral movement and research database access.

Attackers deploy spoofed communications that redirect victims to counterfeit login portals, replicate institutional MFA configurations, and exploit hierarchical trust relationships for lateral movement. Security platforms that automatically trust authenticated university domains create blind spots where compromised credentials operate undetected.

Behavioral analysis maps baseline communication patterns for individual faculty members. When an account behaves unusually, accesses atypical systems, or communicates with unexpected domains, the deviation triggers an investigation of activity that authentication-based defenses routinely miss.
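As a rough illustration of the baselining idea, the sketch below records which domains and systems an account normally touches and reports anything it has never seen. It is a simplified assumption for explanatory purposes, not a description of any product's implementation; the `AccountBaseline` class, the domain names, and the system labels are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class AccountBaseline:
    """Hypothetical per-account profile learned from past, known-good activity."""
    domains: Counter = field(default_factory=Counter)
    systems: Counter = field(default_factory=Counter)

    def learn(self, domain: str, system: str) -> None:
        # Count each observed domain and system to build the baseline.
        self.domains[domain] += 1
        self.systems[system] += 1

    def anomalies(self, domain: str, system: str) -> list[str]:
        """Return the reasons an event deviates from the learned baseline."""
        reasons = []
        if self.domains[domain] == 0:
            reasons.append(f"unexpected domain: {domain}")
        if self.systems[system] == 0:
            reasons.append(f"atypical system: {system}")
        return reasons


# Illustrative history for one faculty account (made-up values).
baseline = AccountBaseline()
for domain, system in [
    ("partner-lab.example", "email"),
    ("university.example", "lms"),
    ("university.example", "email"),
]:
    baseline.learn(domain, system)

normal = baseline.anomalies("university.example", "email")
suspicious = baseline.anomalies("payroll-update.example", "hr-admin")
print(normal)      # no deviations
print(suspicious)  # both the domain and the system are new for this account
```

A production system would weigh many more signals and tolerate gradual drift; the point here is only that the check runs after authentication succeeds, which is where credential-based defenses stop looking.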

Academic Language Provides Perfect Phishing Cover

Attackers weaponize academic communication patterns to evade conventional detection systems. University communications follow predictable structures including formal institutional language, hierarchical trust relationships, and direct messaging that bypasses traditional spam filters. Threat actors deploy AI-generated content replicating university communication styles that signature-based detection cannot identify.

Impersonation attacks target department heads, IT administrators, and financial aid offices using professional tones matching academic norms. Attackers create fake login portals resembling official university websites that complement email campaigns exploiting institutional trust.

Context analysis examines communication patterns beyond content scanning. AI systems detect anomalies in sender relationships, message timing, and institutional communication flows indicating phishing attempts, even when content precisely matches legitimate university correspondence.
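To make the distinction from content scanning concrete, here is a minimal sketch that inspects only message metadata: the sender-recipient relationship, the send time, and the reply path. The `Message` fields, the `context_signals` function, and the three checks are illustrative assumptions rather than a documented detection method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str
    recipient: str
    hour: int       # local send hour, 0-23
    reply_to: str


def context_signals(
    msg: Message,
    known_pairs: set[tuple[str, str]],
    usual_hours: dict[str, set[int]],
) -> list[str]:
    """Collect context anomalies without reading the message body."""
    signals = []
    if (msg.sender, msg.recipient) not in known_pairs:
        signals.append("no prior sender-recipient relationship")
    if msg.hour not in usual_hours.get(msg.sender, set()):
        signals.append("sent outside sender's usual hours")
    if msg.reply_to.split("@")[-1] != msg.sender.split("@")[-1]:
        signals.append("reply-to domain differs from sender domain")
    return signals


# Hypothetical history: who normally writes to whom, and when.
known_pairs = {("dean@university.example", "staff@university.example")}
usual_hours = {"dean@university.example": set(range(8, 18))}

legit = Message("dean@university.example", "staff@university.example",
                10, "dean@university.example")
spoof = Message("dean@university.example", "newhire@university.example",
                3, "dean@univ-mail.example")

legit_signals = context_signals(legit, known_pairs, usual_hours)
spoof_signals = context_signals(spoof, known_pairs, usual_hours)
print(legit_signals)
print(spoof_signals)
```

Because none of the checks read the body, a message whose wording perfectly imitates institutional correspondence can still accumulate signals.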

Educational Platforms Become Attack Infrastructure

Higher education institutions rely on educational platforms such as forms, HR systems, and learning management tools, which attackers exploit to inherit domain reputation and institutional trust. Threat intelligence has documented malicious forms hosted on compromised school domains that collect credentials through methods that bypass traditional email security filters.

Compromised accounts enable payroll redirection attempts and lateral movement across institutional networks. Attackers systematically target university HR software to redirect employee paychecks while maintaining persistent access to expand operations.

Pattern recognition separates legitimate tool use from credential theft by analyzing user behavior across educational platforms. AI systems detect when compromised accounts create unusual forms, access administrative functions outside normal patterns, or generate activity inconsistent with legitimate educational purposes.

Diverse Campus Populations Create Security Blind Spots

Universities manage security across populations with vastly different technical competencies. Traditional one-size-fits-all security approaches create gaps by assuming uniform user behavior across undergraduate students, graduate researchers, faculty, staff, and external collaborators. When security systems treat all users identically, they miss threats targeting specific segments or fail to account for legitimate behavioral variations.

Faculty conducting international research exhibit different communication patterns than undergraduate students managing coursework. Graduate researchers accessing laboratory equipment create different network traffic than administrative staff processing records.

Personalized detection adapts to individual communication patterns by learning normal behavior for different user types. AI systems recognize that faculty research collaboration differs from student course communication, enabling threat detection that accounts for legitimate behavioral diversity while still identifying security anomalies.
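One simple way to picture cohort-aware detection is to score the same observation against different baselines. The sketch below uses a z-score over daily counts of externally addressed emails; the `history` figures, the role names, and the threshold of 3.0 are invented for illustration and are not drawn from real campus data.

```python
from statistics import mean, stdev

# Hypothetical daily counts of external emails sent, per user type.
history = {
    "faculty": [40, 35, 50, 45, 38],
    "undergraduate": [2, 0, 1, 3, 1],
}


def z_score(role: str, value: float) -> float:
    """Distance of value from the role's mean, in standard deviations."""
    samples = history[role]
    spread = stdev(samples)
    return (value - mean(samples)) / spread if spread else 0.0


def is_anomalous(role: str, value: float, threshold: float = 3.0) -> bool:
    return abs(z_score(role, value)) > threshold


# The same volume is routine for one cohort and a strong outlier for another.
faculty_flag = is_anomalous("faculty", 45)
student_flag = is_anomalous("undergraduate", 45)
print(faculty_flag, student_flag)
```

A single campus-wide threshold would either flag the faculty member constantly or never flag the student account, which is the gap that per-cohort and per-user baselines are meant to close.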

Academic Openness Conflicts with Security Lockdowns

Research collaboration requires information sharing, network accessibility, and international partnerships that conflict with traditional security lockdown measures. Universities maintain complex interdependencies across multi-institutional networks while supporting experimental software in research environments. Heavy-handed security controls disrupt legitimate academic work, creating pressure to bypass security measures or maintain overly permissive access policies.

Cybersecurity challenges stem from multi-institutional collaboration networks, complex identity management for external researchers, and laboratory IoT devices that prioritize functionality over security controls. Grant-funded projects use specialized software with varying security profiles, while research deadlines create pressure to keep systems accessible.

Context-aware protection maintains openness while detecting threats by understanding legitimate academic workflows. AI systems distinguish between normal research collaboration patterns and malicious lateral movement attempts.

Traditional Detection Cannot Keep Pace with Innovation

Universities face constantly evolving attack methods targeting research networks with experimental software configurations. Signature-based systems cannot detect unknown threats because they rely on established patterns, creating vulnerability windows where research activities operate beyond comprehensive monitoring.

Modern AI-powered attacks use novel evasion techniques that circumvent rule-based detection. Research data exfiltration may appear identical to legitimate academic collaboration. Universities also support cutting-edge research with emerging technologies, and the experimental applications used in those collaborations can be exploited before detection signatures exist.

Real-time anomaly detection catches never-before-seen attack patterns by identifying behavioral deviations rather than matching known signatures. AI systems detect zero-day threats by recognizing unusual network traffic, atypical system access patterns, or anomalous data movement indicating compromise.
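The contrast with signature matching can be sketched with a small streaming detector that keeps a running estimate of normal volume and flags sharp departures from it. This uses an exponentially weighted mean and variance as a stand-in technique; the `StreamingDetector` class, its parameters, and the sample transfer sizes are assumptions chosen for illustration.

```python
class StreamingDetector:
    """Flags values far from an exponentially weighted running baseline."""

    def __init__(self, alpha: float = 0.2, threshold: float = 3.0, warmup: int = 5):
        self.alpha = alpha          # weight given to the newest observation
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.warmup = warmup        # observations to see before flagging
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def observe(self, value: float) -> bool:
        """Return True if value deviates sharply from the baseline so far."""
        self.count += 1
        if self.count == 1:
            self.mean = value
            return False
        deviation = value - self.mean
        std = self.var ** 0.5
        flagged = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        if not flagged:
            # Only unflagged values update the baseline, so an outlier
            # does not immediately shift what counts as normal.
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return flagged


# Hypothetical outbound transfer sizes in MB for one research host.
detector = StreamingDetector()
flags = [detector.observe(v) for v in [100, 110, 95, 105, 100, 98, 5000, 102]]
print(flags)
```

No signature for the large transfer is needed; it stands out only because it departs from what the host has done before. Distinguishing it from a legitimate bulk dataset share would require the additional context discussed in the earlier sections.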

Security Tools Miss University-Specific Rhythms

Generic security solutions miss academic rhythms such as registration periods, research deadlines, and administrative cycles, which create predictable vulnerability windows. Attackers exploit university-specific timing such as payroll processing periods and academic calendar transitions to maximize attack effectiveness during these high-activity intervals.

Traditional security tools lack the context to distinguish legitimate academic communication from sophisticated impersonation attacks targeting university processes. Threat intelligence has documented payroll redirection campaigns that target university employees during processing periods, leveraging predictable administrative timing.

Academic-trained models recognize legitimate versus suspicious university communications by learning institutional rhythms and academic-specific patterns. AI systems understand when high-volume email activity represents legitimate registration periods versus coordinated phishing campaigns, reducing operational friction while improving detection accuracy.
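A calendar-aware baseline can be pictured as a volume threshold that moves with the academic schedule. In the sketch below, the `BUSY_PERIODS` dates, the multiplier, and the tolerance are hypothetical values chosen for illustration; a real model would learn these rhythms from institutional data rather than hard-code them.

```python
from datetime import date

# Hypothetical high-activity windows: (start, end, expected volume multiplier).
BUSY_PERIODS = [
    (date(2025, 8, 18), date(2025, 8, 29), 4.0),  # assumed registration window
]


def expected_volume(day: date, baseline: float) -> float:
    """Scale the normal daily email volume during known busy periods."""
    for start, end, multiplier in BUSY_PERIODS:
        if start <= day <= end:
            return baseline * multiplier
    return baseline


def is_volume_suspicious(
    day: date, observed: float, baseline: float, tolerance: float = 1.5
) -> bool:
    return observed > expected_volume(day, baseline) * tolerance


# The same spike is expected during registration and unusual in mid-October.
during_registration = is_volume_suspicious(date(2025, 8, 20), 3500, baseline=1000)
mid_semester = is_volume_suspicious(date(2025, 10, 7), 3500, baseline=1000)
print(during_registration, mid_semester)
```

Volume alone would not separate a registration surge from a phishing campaign launched during registration, so a schedule-adjusted threshold like this would be one input alongside the relationship and identity signals described earlier.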

How Abnormal Addresses Higher Education Security Challenges

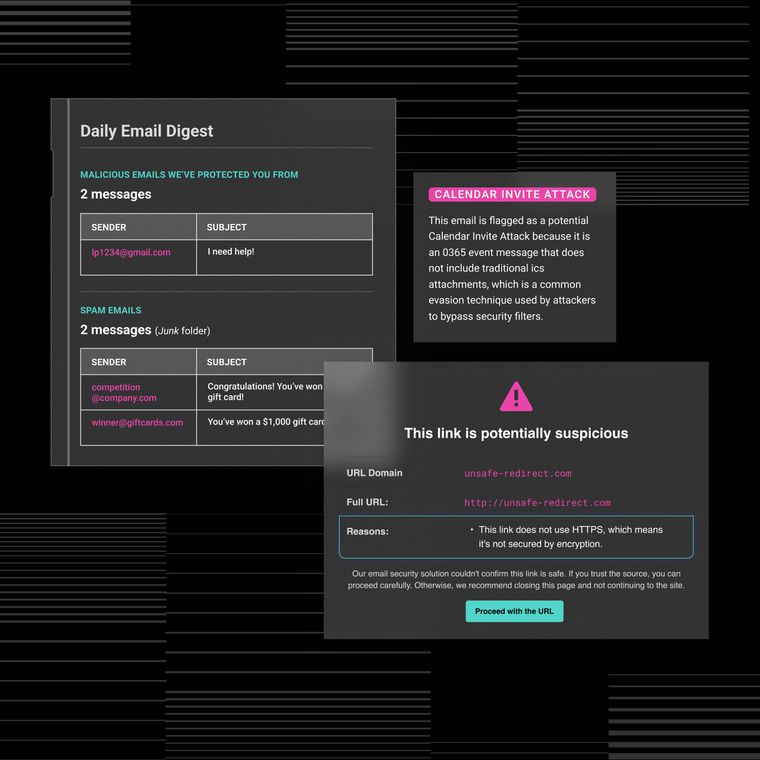

Abnormal's behavioral AI platform addresses university cybersecurity challenges through rapid deployment that integrates with academic email systems. The platform combines behavioral analysis that learns normal communication patterns, identity validation that verifies sender authenticity, and content scanning that analyzes messages for advanced threats targeting universities.

The platform is effective against attack vectors including advanced phishing campaigns, social engineering attacks that leverage institutional trust relationships, and account takeover in shared resource environments. Higher education institutions face large email attack surfaces spanning staff, faculty, students, alumni, vendors, and partner institutions.

Behavioral AI quickly learns to recognize anomalies in messages so it can detect and remediate threats targeting academic environments. The platform integrates with existing campus IT infrastructure and provides audit-ready compliance reporting that supports FERPA requirements while maintaining operational flexibility.

Want to learn how Abnormal can protect your institution against sophisticated university-targeted attacks? Request a demo to discover how behavioral AI addresses the unique cybersecurity challenges facing higher education.
