How AI Is Transforming Cybersecurity and What to Do About It

Explore the latest AI-driven cyber threats and practical defenses powered by behavioral AI to keep your organization secure.

July 4, 2025

Artificial intelligence (AI) has changed the way cyberattacks unfold. Threat actors now use it to launch faster, smarter, and more convincing attacks. At the same time, defenders rely on AI to detect and stop threats faster than any human team could. The pace of both attack and defense has accelerated, and the stakes have never been higher.

A 2023 survey of 300 global leaders found that 98% of them are worried about the cybersecurity risks of AI. Their concerns are well-founded. Cybercriminals now use AI tools to automate phishing, craft believable messages, and generate deepfake audio and video to impersonate trusted individuals.

These AI-generated scams are more convincing and more likely to succeed. Large language models (LLMs) can generate tailored phishing emails in seconds. Deepfakes clone executive voices to authorize fraudulent transactions. Machine learning (ML) models can also help malware evade detection, although these techniques have not yet become part of mainstream attacks.

As a result, traditional defenses struggle to keep up. As attackers adopt tools that learn and adapt, security must evolve just as quickly. Defenders need systems that analyze behavior, automate response, and reduce reliance on manual intervention.

This guide examines five of the most pressing AI-driven threats and the practical defenses organizations can implement immediately. From behavioral AI to zero-trust frameworks and automated responses, these strategies help predict, prevent, and contain the risks of AI-powered attacks before they cause lasting damage to your business, data, or compliance posture.

1. Deepfake-Enhanced Business Email Compromise (BEC)

AI-generated deepfakes now let criminals impersonate executives with convincing video and voice clones, which traditional email security was never designed to catch.

Deepfake technology creates realistic impersonations of company leadership, tricking finance teams into making unauthorized wire transfers or sharing credentials. These attacks exploit familiar communication channels and established trust relationships. Traditional email security gateways cannot detect these sophisticated impersonations because they appear legitimate at the surface level.

Deploy behavior-based authentication systems that learn normal communication patterns and flag deviations. Establish mandatory out-of-band verification for all high-value financial requests, regardless of apparent source. Train staff to recognize subtle inconsistencies in executive communication styles.

The bottom line? Set up multi-channel verification protocols and behavioral analysis systems now. When deepfakes can convincingly mimic your CEO, implicit trust becomes a liability, so verify every high-risk request.
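As a minimal sketch of the out-of-band rule described above, the check below blocks any high-value or bank-detail-changing request until it has been confirmed on a second channel, no matter who appears to have sent it. The `PaymentRequest` fields and the `HIGH_VALUE_THRESHOLD` value are hypothetical and would need to match your own finance workflow.

```python
from dataclasses import dataclass

# Hypothetical threshold, chosen only for illustration.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str                      # apparent sender; never trusted on its own
    amount: float
    channel: str                        # e.g. "email", "voice", "video"
    changes_bank_details: bool = False
    verified_out_of_band: bool = False  # set after a callback on a known number


def needs_out_of_band_check(req: PaymentRequest) -> bool:
    """High-value requests and bank-detail changes always need a second channel."""
    return req.amount >= HIGH_VALUE_THRESHOLD or req.changes_bank_details


def may_execute(req: PaymentRequest) -> bool:
    """Allow execution only once any required verification has happened."""
    if needs_out_of_band_check(req):
        return req.verified_out_of_band
    return True
```

Note that the requester's identity plays no part in the decision. That is deliberate: a convincing deepfake of an executive should not be able to skip the check.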

2. Automated Malware and Ransomware Creation

AI tools now generate unique malware variants faster than signature-based defenses can adapt, requiring behavior-focused detection rather than code recognition.

Criminal AI platforms create custom malware for each target, rendering hash-based detection useless. Each attack uses unique code that evades traditional antivirus signatures. Automated systems generate convincing phishing emails and sophisticated ransomware variants, accelerating attack timelines significantly.

Focus on detecting malicious behaviors rather than known signatures. Deploy advanced sandboxing that analyzes file actions before execution. Maintain immutable backups isolated from production networks and regularly test recovery procedures.

The key is shifting to behavior-based detection and strengthening your backup resilience. When malware evolves faster than signatures, you need to monitor its behavior, not its appearance.
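To illustrate the difference between signature matching and behavior-based detection, here is a small sketch that scores a sample by what it does during a sandbox run rather than by its file hash. The behavior names, weights, and `ALERT_THRESHOLD` are assumptions made up for this example; a real sandbox or EDR product defines its own event vocabulary.

```python
# Hypothetical behavior names and weights, for illustration only.
SUSPICIOUS_BEHAVIORS = {
    "deletes_shadow_copies": 5,
    "encrypts_user_documents": 5,
    "mass_file_rename": 4,
    "disables_security_tool": 4,
    "contacts_unknown_host": 2,
}
ALERT_THRESHOLD = 8


def behavior_score(observed_events):
    """Sum the weights of the distinct suspicious behaviors seen in one run."""
    return sum(SUSPICIOUS_BEHAVIORS.get(event, 0) for event in set(observed_events))


def is_malicious(observed_events):
    """Flag the sample when its combined behavior score reaches the threshold."""
    return behavior_score(observed_events) >= ALERT_THRESHOLD
```

Because the score depends only on observed actions, two samples with entirely different code but the same ransomware-like behavior receive the same verdict.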

3. Adversarial ML - Model Poisoning, Theft, and Evasion

Attackers now target AI systems directly, poisoning training data, stealing models, and crafting inputs that fool AI defenses while executing malicious actions.

Machine learning systems face sophisticated attacks, including data poisoning that skews results, model theft through API manipulation, and adversarial inputs that appear benign but trigger malicious behaviors. These attacks compromise the AI systems that organizations increasingly rely on for security decisions.

Secure ML pipelines with data validation controls and differential privacy techniques. Monitor model performance for any unexpected changes that may indicate a compromise. Apply rigorous security controls to AI infrastructure equivalent to production code standards.

Start by validating the integrity of your training data and continuously monitoring model performance. Remember, your AI defenses can become weapons against you if they're not properly secured.
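The two starting points above, checking training data integrity and watching model performance, can be sketched roughly as follows. This assumes training records are available as strings and that you track a single accuracy metric; the function names and the 0.05 tolerance are hypothetical.

```python
import hashlib


def fingerprint(records):
    """Return a SHA-256 digest over the training records, in order."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(record.encode("utf-8"))
        digest.update(b"\n")  # separator so record boundaries affect the hash
    return digest.hexdigest()


def data_unchanged(records, expected_digest):
    """Compare the current data against a digest recorded at approval time."""
    return fingerprint(records) == expected_digest


def accuracy_dropped(baseline, current, tolerance=0.05):
    """Flag a drop in accuracy larger than the (hypothetical) tolerance."""
    return (baseline - current) > tolerance
```

A digest mismatch only shows that the data changed, not that it was poisoned, so a failed check is a trigger for review rather than proof of compromise.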

4. Autonomous Attack Bots and Reconnaissance

AI-powered attack bots continuously probe systems and adapt to defenses in real-time, requiring equally automated defensive responses.

Autonomous attack systems map vulnerabilities, test credentials, and pivot through networks faster than human analysts can respond. These systems learn from defensive countermeasures and adjust their tactics dynamically, which shortens the time from initial access to impact and increases success rates.

Implement continuous attack surface monitoring with automated threat intelligence updates. Deploy network micro-segmentation and zero-trust principles to contain lateral movement. Establish automated response protocols that isolate compromised systems promptly and effectively.

You need to match automation with automation through continuous monitoring and automated response systems. The hard truth is that when attacks operate at machine speed, human-paced defenses will inevitably fall short.
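One way to picture an automated response protocol is a rule that isolates a source as soon as its failed-login rate crosses a limit, without waiting for an analyst. The sketch below assumes timestamps arrive in non-decreasing order as seconds; `AutoIsolator`, `WINDOW_SECONDS`, and `MAX_FAILURES` are hypothetical names and values, and adding to the `isolated` set stands in for a real quarantine action.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60  # hypothetical sliding-window length
MAX_FAILURES = 5     # hypothetical limit within the window


class AutoIsolator:
    def __init__(self):
        self._failures = defaultdict(deque)  # source -> recent failure times
        self.isolated = set()

    def record_failure(self, source, timestamp):
        """Record a failed login and isolate the source if it exceeds the limit."""
        window = self._failures[source]
        window.append(timestamp)
        # Drop failures that fell out of the sliding window.
        while window and timestamp - window[0] > WINDOW_SECONDS:
            window.popleft()
        if len(window) > MAX_FAILURES:
            self.isolated.add(source)  # stand-in for a real quarantine call
        return source in self.isolated
```

A burst of six failures within a minute isolates the source, while the same number of failures spread over several minutes does not.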

5. Supply Chain and Vendor Email Attacks

AI lets attackers impersonate trusted vendors convincingly by analyzing past communications and generating contextually appropriate fraudulent requests.

Attackers compromise vendor email accounts and use AI to analyze communication history, creating perfectly crafted payment change requests that match established patterns. These attacks succeed because they leverage existing trust relationships and appear completely normal to recipients.

Track vendor communication patterns and establish baseline behaviors for each relationship. Deploy AI detection systems that identify subtle linguistic deviations. Implement dual-approval workflows for all payment detail modifications with multi-channel verification requirements.

Focus on establishing vendor communication baselines and enforcing dual-verification for payment changes. When AI can perfectly mimic your vendors, verification becomes your only defense.
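A dual-approval workflow for payment detail changes could look something like the sketch below. It assumes approvers are identified by unique strings and that a callback to a phone number already on file counts as the second channel; the `BankChangeRequest` class and its fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BankChangeRequest:
    vendor: str
    new_account: str
    approvers: set = field(default_factory=set)
    callback_confirmed: bool = False  # confirmed via a number already on file

    def approve(self, employee):
        """Record an approval; repeat approvals by one person count once."""
        self.approvers.add(employee)

    def can_apply(self):
        """Require two distinct approvers and an out-of-band confirmation."""
        return len(self.approvers) >= 2 and self.callback_confirmed
```

Storing approvers in a set means one person approving twice never satisfies the rule, which is the point of dual control.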

How to Defend Your Organization From AI-Enabled Cyberattacks

As AI systems become increasingly capable, threat actors are leveraging AI to launch faster, more personalized, and more convincing attacks. That’s why organizations must adopt a proactive and comprehensive approach to managing AI risks. Here’s how to strengthen your defenses:

Audit AI Systems Regularly

Before deploying any AI-based tool, conduct a thorough security assessment and confirm that the system aligns with your organization’s privacy and compliance standards. Ongoing audits help identify vulnerabilities and reduce exposure to adversarial attacks or misuse. Work with cybersecurity experts who specialize in AI to perform penetration testing, review training data integrity, and validate threat detection performance.

Avoid Sharing Sensitive Information with AI Tools

Many users unknowingly enter confidential or regulated data into generative AI models. This includes proprietary business details and personally identifiable information. Although AI systems may not disclose data outright, interactions are often retained for model improvement or maintenance purposes. Train employees to avoid sharing sensitive information with AI, especially when using public tools.
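Training can be backed up with a technical guardrail. As a rough sketch, the filter below masks two common kinds of personal data before a prompt leaves your environment. The patterns are simplified assumptions (email addresses and US-style Social Security numbers only) and will not catch every form of sensitive data.

```python
import re

# Simplified, illustrative patterns; real data-loss prevention needs far more.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com, SSN 123-45-6789"))
# prints: Contact [EMAIL], SSN [SSN]
```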

Protect Data Used by AI Systems

The effectiveness of AI systems depends on the quality and security of the data used for training. If attackers manipulate this data through a tactic known as data poisoning, the results can be misleading or even dangerous. Protect training data with encryption, access controls, and network segmentation. Use advanced threat detection and intrusion prevention tools to protect against tampering.

Keep Software and AI Tools Updated

Outdated software leaves systems vulnerable to AI-powered exploits. Maintain all platforms, including AI frameworks, applications, and security systems, with the latest patches. Use real-time monitoring tools, endpoint detection and response (EDR), and next-gen antivirus to flag suspicious activity and block sophisticated attacks.

Implement Adversarial Training for AI Models

Train AI models using adversarial techniques to increase resilience. Exposing systems to various simulated attack scenarios enables them to identify patterns of manipulation better and detect emerging threats. This strengthens model behavior against unpredictable or intentionally deceptive inputs.
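To make the idea concrete, here is a small pure-Python sketch of adversarial training for a logistic regression classifier. It uses the fast gradient sign method (FGSM) to perturb each training example in the direction that increases the loss, then trains on both the clean and the perturbed version. FGSM is one common technique picked here for illustration, not necessarily what any given product uses, and the toy data, `eps`, and learning rate are assumptions.

```python
import math


def sign(v):
    return (v > 0) - (v < 0)


def predict(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the log loss."""
    p = predict(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, epochs=200, lr=0.1):
    """Gradient descent; with eps > 0, also train on FGSM-perturbed inputs."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            samples = [x] if eps == 0 else [x, fgsm(w, b, x, y, eps)]
            for xs in samples:
                err = predict(w, b, xs) - y
                w = [wi - lr * err * xi for wi, xi in zip(w, xs)]
                b -= lr * err
    return w, b


# Toy usage: one feature, two well-separated points.
w, b = train([([2.0], 1), ([-2.0], 0)], eps=0.5)
```

The same pattern extends to larger models, where frameworks compute the input gradient automatically, but the loop is the same: generate a perturbed example, then include it in training.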

Train Your Security Team and Employees

Phishing attacks created by AI models can appear highly credible. Equip your workforce with AI-specific security awareness training. Teach them how to flag suspicious emails, avoid clicking unknown links, and recognize AI-generated threats. A well-informed team reduces the risk of social engineering and malware infiltration.

Adopt AI Vulnerability Management

AI systems present unique weaknesses that traditional vulnerability management may miss. Use an AI-specific approach that identifies, classifies, and mitigates flaws in models, APIs, and integration points. This minimizes your exposure to emerging attack vectors driven by AI innovation.

Establish an AI Incident Response Plan

Despite the best precautions, incidents may still occur. Develop a detailed AI incident response strategy that includes real-time threat containment, root cause analysis, and remediation. Involving legal, compliance, and IT stakeholders ensures alignment across your organization.

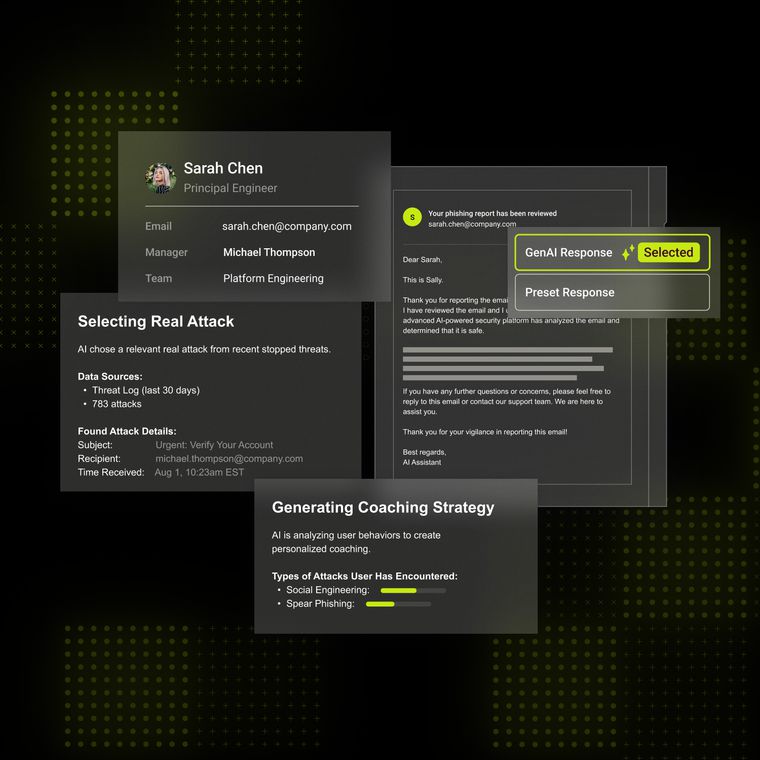

Adapting Security Strategies for the Age of AI-Powered Threats

The security leaders of tomorrow are investing in behavioral analytics and AI-native security platforms that adapt to evolving threats in real-time. Those relying on legacy solutions will face increasing vulnerability to the new generation of AI-powered attacks. Book a demo with Abnormal to see how behavioral AI can help protect your organization against these emerging threats.
