The Psychology Behind Social Engineering Email Attacks

Learn how attackers exploit human behavior in social engineering email attacks and what steps you can take to defend against them.

August 18, 2025

Cybercriminals stole over $16 billion from organizations and individuals in 2024, a 33% increase from the previous year. Yet most of these attacks didn't succeed through sophisticated hacking techniques or advanced malware. Instead, they exploited something far more fundamental: human psychology.

Consider the recent Qantas data breach of July 2025, where attackers accessed six million customers' personal information through a third-party platform. While the technical details matter, the real story lies in how these criminals manipulated human behavior to gain initial access. They understood that behind every security system sits a person who can be influenced, pressured, or deceived.

Social engineering email attacks represent the evolution of cybercrime from purely technical exploits to psychological manipulation. Attackers now study human behavior as carefully as they once studied code vulnerabilities. They craft messages that trigger our natural responses to authority, create artificial urgency that bypasses critical thinking, and exploit our inherent trust in familiar communications. Understanding how these psychological tactics work is the first step in building defenses that protect both technology and the people who use it.

Understanding the Attacker's Playbook

Attackers turn publicly available information into highly targeted emails, making reconnaissance a major threat. Their approach follows predictable steps that combine information gathering with psychological manipulation.

Map the Terrain with Open-Source Intelligence (OSINT)

Reconnaissance often starts with LinkedIn profiles, conference bios, and press releases. These sources tell attackers who approves invoices, which vendors you use, and when quarters end. Attackers then mix this open-source intelligence with psychological triggers like scarcity and authority to create requests that feel familiar and lower defenses.

Build Plausible Personas and Hijack Threads

With this context, attackers may register look-alike domains or compromise real accounts to join ongoing conversations. Thread hijacking works because messages appear within trusted email chains. Representativeness bias makes people accept anything that matches established patterns.
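To make the look-alike domain tactic concrete, here is a minimal Python sketch that flags sender domains sitting within a couple of character edits of a domain you already trust. The `TRUSTED_DOMAINS` list, the `lookalike_of` helper, and the distance threshold are all illustrative assumptions, not a description of any particular product's detection logic.

```python
from typing import Optional


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum inserts, deletes, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]


# Hypothetical allow-list of domains the organization really works with.
TRUSTED_DOMAINS = {"example.com", "acme-supplies.com"}


def lookalike_of(sender_domain: str, max_distance: int = 2) -> Optional[str]:
    """Return the trusted domain that sender_domain appears to imitate, if any."""
    domain = sender_domain.lower().strip()
    if domain in TRUSTED_DOMAINS:
        return None  # exact match: this is the real domain
    for trusted in sorted(TRUSTED_DOMAINS):
        if edit_distance(domain, trusted) <= max_distance:
            return trusted
    return None


print(lookalike_of("examp1e.com"))
print(lookalike_of("example.com"))
```

A fixed threshold like this will misfire on very short domains and does nothing against compromised real accounts, so treat it as one signal among several rather than a verdict.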

Target the People Who Move Money

Finance teams, HR staff, and executive assistants are prime targets because they handle wire transfers, payroll, and urgent bookings. Attackers combine OSINT data with urgent, fear-based messages to push for quick approvals. Authority bias increases the success rate when messages appear to come from senior leaders, which is why CFO impersonations remain profitable.

Well-prepared scams succeed because every detail seems normal. Understanding these methods shows how attackers use psychological triggers to exploit human trust.

How Attackers Make Their Emails Look Legitimate

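Attackers use refined tactics to make malicious emails appear authentic and bypass traditional defenses. Plain-text emails often evade legacy filters because they carry no HTML formatting or complex structures for those filters to inspect. Attackers can also register free domains and configure them to pass SPF and DKIM authentication. Those checks only confirm that a message really came from the domain it claims, not that the domain deserves trust, so an authenticated message can still seem to come from a trusted source.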
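The gap between "authenticated" and "trusted" is easy to see in code. The sketch below uses Python's standard `email` package to read the `Authentication-Results` header from a made-up message, then separately checks whether the sender domain is one you actually know. The sample message, the `KNOWN_VENDOR_DOMAINS` set, and the `assess` helper are hypothetical, and the header parsing is deliberately simplified.

```python
import re
from email import message_from_string
from email.utils import parseaddr
from typing import Dict

# Made-up message: the look-alike domain passes SPF and DKIM for itself.
RAW = """\
From: "Acme Billing" <billing@acme-supp1ies.com>
To: ap@example.com
Subject: Updated bank details
Authentication-Results: mx.example.com; spf=pass smtp.mailfrom=acme-supp1ies.com; dkim=pass header.d=acme-supp1ies.com

Please use the new account for this month's invoice.
"""

# Hypothetical list of vendor domains already on file.
KNOWN_VENDOR_DOMAINS = {"acme-supplies.com"}


def auth_results(header: str) -> Dict[str, str]:
    """Pull method=result pairs (spf, dkim, dmarc) out of the header text."""
    pairs = re.findall(r"\b(spf|dkim|dmarc)=(\w+)", header, flags=re.I)
    return {method.lower(): result.lower() for method, result in pairs}


def assess(raw: str) -> dict:
    msg = message_from_string(raw)
    _, address = parseaddr(msg.get("From", ""))
    domain = address.rpartition("@")[2].lower()
    results = auth_results(msg.get("Authentication-Results", ""))
    return {
        "from_domain": domain,
        "spf": results.get("spf"),
        "dkim": results.get("dkim"),
        # Authentication says nothing about whether we know this sender.
        "known_sender": domain in KNOWN_VENDOR_DOMAINS,
    }


print(assess(RAW))
```

In this example both checks pass while `known_sender` is false, which is exactly the situation a rule that only looks at SPF and DKIM would miss. In practice, only an `Authentication-Results` header added by your own receiving server should be relied on.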

Another common tactic is reply-chain hijacking, where attackers insert themselves into an existing email conversation. By mimicking the sender’s tone, maintaining thread context, and matching local working hours, they create a convincing illusion of legitimacy.

These methods exploit weaknesses in rule-based security systems. Behavioral AI, which detects tone, timing, and contextual mismatches, is critical for identifying such deception. Without advanced detection, even well-trained employees may fail to spot technical manipulation in ordinary-looking messages. The sophistication of these tactics makes them especially dangerous in high-value business communications, where a single convincing email can result in significant financial or data loss.

Cognitive Biases That Enable Successful Attacks

High-conversion phishing emails succeed because they tap into predictable cognitive biases that operate below conscious awareness. By understanding these mental shortcuts, you can create the pause needed to verify requests before acting.

The main cognitive biases attackers exploit are:

Authority Bias

People instinctively comply with requests from perceived superiors. In cybersecurity, attackers exploit this by spoofing executives or senior managers, making requests for wire transfers, login credentials, or sensitive documents. Even well-trained employees may override verification protocols when a message appears to come from the CEO or CFO, believing the consequences of delay outweigh the need for scrutiny.

Urgency Pressure

Deadlines such as “respond by noon” or “approve within the hour” create stress that suppresses analytical thinking. In phishing scenarios, urgency is often layered onto authority bias to force immediate action, leaving no time for verification. This manipulation leads to rushed approvals, quick clicks on malicious links, or hasty data sharing before technical defenses can intervene.

Familiarity Trust

Attackers use hijacked vendor accounts or look-alike domains to replicate real communication patterns. By mirroring standard email formats, tone, and timing, they bypass suspicion and make malicious requests feel routine. In cybersecurity incidents, this tactic allows fraudulent invoices or fake contract updates to slip past both human and technical filters because they appear identical to past legitimate exchanges.

Social Obligation

Humans have an ingrained tendency to help, especially when asked politely by someone in a position of authority. Cybercriminals exploit this through emails that request urgent assistance, such as help with a payment or an account issue. This sense of duty can override standard security checks, particularly in service-oriented roles.

Loss Aversion

People are more motivated to avoid losses than to secure gains. Attackers exploit this by suggesting that inaction could cause payroll delays, missed deadlines, or leadership dissatisfaction. In a cybersecurity breach, loss aversion often pushes employees to act quickly on fraudulent instructions, believing that a delay could have worse consequences.

Confirmation Bias

Recipients are more likely to believe and act on information that aligns with their existing expectations or recent discussions. In phishing campaigns, this might mean referencing ongoing projects or upcoming renewals. Such alignment makes malicious emails blend seamlessly into normal workflows, increasing the risk of unnoticed compromise.

Availability Heuristic

Information that is recent or emotionally charged is given greater weight in decision-making. Attackers capitalize on this by tying scams to current events, regulatory changes, or industry disruptions. These timely references make fraudulent emails feel relevant and credible, reducing skepticism and increasing click-through rates.

Social Proof

When people believe their peers have already acted, they are more likely to follow suit. In phishing, attackers forge CC lists, reply-all threads, or references to completed actions by “other managers” to create a false sense of consensus. This herd effect lowers individual caution and speeds compliance.

Anchoring

The first figure or deadline presented sets a mental benchmark for later decisions. In scams, attackers may open with a high invoice total or an immediate payment request, making subsequent changes seem minor and reasonable. This manipulation can lead to approval of inflated or fabricated amounts without proper review.

Reciprocity Bias

When people feel they have received something, even something minor, they are more inclined to give something back. Cybercriminals may send helpful documents, free reports, or supportive messages before making their actual request. This sense of obligation can be exploited to obtain credentials or authorize payments.

Scarcity Bias

Opportunities framed as limited-time or exclusive push recipients into rapid decision-making. In cybersecurity attacks, scarcity is often used to bypass verification steps, making employees click links or approve transfers before the supposed offer expires, thereby reducing the chance of second-guessing.

Halo Effect

Trust in a familiar brand, partner, or sender often spills over to the content of a message. In phishing, attackers replicate logos, signatures, and design elements from trusted entities. This perceived legitimacy lowers scrutiny, making recipients more likely to click links or open attachments that bypass technical filters.

These triggers often work in combination, with authority, urgency, and familiarity forming a potent sequence. Recognizing them, and taking even a few seconds to verify a request through a trusted secondary channel, can protect against multimillion-dollar losses.
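The way these triggers stack can be sketched with a rough keyword check: if a message shows two or more distinct cue types, it is a candidate for second-channel verification. The `CUES` patterns and both helper functions are illustrative assumptions. Real detection relies on far richer signals than a handful of regular expressions.

```python
import re
from typing import Set

# Hypothetical, deliberately small pattern lists for three of the biases above.
CUES = {
    "authority": [r"\bceo\b", r"\bcfo\b", r"\bon behalf of\b"],
    "urgency": [r"\burgent\b", r"\bimmediately\b", r"\bby noon\b",
                r"\bwithin the hour\b", r"\basap\b"],
    "loss_aversion": [r"\bpayroll\b.*\bdelay", r"\bpenalt(y|ies)\b",
                      r"\bsuspended\b"],
}


def cues_present(text: str) -> Set[str]:
    """Return the names of the cue types that appear in the message text."""
    lowered = text.lower()
    return {
        name
        for name, patterns in CUES.items()
        if any(re.search(pattern, lowered) for pattern in patterns)
    }


def needs_second_channel_check(text: str) -> bool:
    """Flag messages that combine at least two different cue types."""
    return len(cues_present(text)) >= 2


message = "This is the CFO. I need this wire approved within the hour."
print(sorted(cues_present(message)))
print(needs_second_channel_check(message))
```

Requiring two cue types rather than one is a design choice to cut noise: plenty of legitimate emails are urgent, but urgency paired with an appeal to authority is the pattern described above.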

Building Psychological Resilience Against Attacks

Building psychological resilience starts with deliberately slowing your response time to short-circuit an attacker's manipulation tactics. This approach requires both individual discipline and organizational culture change, including:

Following the simple STOP framework: Slow down, Think about the request, Observe any red flags, and only then Proceed. This methodical approach counters the urgency and authority cues that make social engineering effective.

Verifying the request via a second channel before moving money or sharing credentials. For instance, call the sender directly or use an internal ticket system. Attackers usually find it more difficult to fake both communication paths simultaneously, making cross-verification a strong (but not foolproof) defense.

Breaking the authority and urgency cues scammers rely on. Even a 30-second pause can significantly cut impulsive clicks.
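For teams that want to build the second-channel rule into a workflow, the idea can be sketched as a simple gate: a high-risk request cannot proceed until someone records a confirmation through a channel other than the one it arrived on. The `Request` class, the channel names, and the `HIGH_RISK_ACTIONS` set are hypothetical and stand in for whatever ticketing or approval system you already use.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical set of actions that always require out-of-band confirmation.
HIGH_RISK_ACTIONS = {"wire_transfer", "credential_share", "bank_detail_change"}


@dataclass
class Request:
    action: str
    received_via: str  # channel the request arrived on, e.g. "email"
    confirmed_via: Set[str] = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        """Record that the request was confirmed through the given channel."""
        self.confirmed_via.add(channel)

    def may_proceed(self) -> bool:
        """Low-risk actions pass; high-risk ones need a different channel."""
        if self.action not in HIGH_RISK_ACTIONS:
            return True
        # Replying on the original channel does not count as verification.
        return any(ch != self.received_via for ch in self.confirmed_via)


request = Request(action="wire_transfer", received_via="email")
request.confirm("email")           # attacker could control this channel
print(request.may_proceed())
request.confirm("phone_callback")  # call to a number already on file
print(request.may_proceed())
```

The key detail is that a reply on the same email thread never satisfies the check, since a hijacked thread would confirm itself.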

Leaders play a crucial role in building this resilience culture. When managers celebrate a cautious question as much as a quick reply, employees treat vigilance as part of the job, not an obstacle. This psychological safety enables teams to resist manipulation attempts without fear of appearing inefficient. However, even the best intentions often fall short without proper training methodologies.

Why Traditional Security Awareness Falls Short

Annual security awareness training often produces knowledge without lasting behavioral change. This helps explain why human error continues to account for a significant share of successful breaches, as reported in recent industry analyses. Many programs rely on single-session presentations that are quickly forgotten, leaving employees unprepared for real-world threats.

Under cognitive load, professionals default to instinctive responses rather than recalling training, creating exploitable gaps. This disconnect between awareness and action remains a persistent weakness in organizational defenses.

Traditional, static courses also fail to account for how memory works; without regular reinforcement, most information is lost within days. Effective security education requires ongoing, scenario-based practice, reinforced through frequent, contextual coaching. Bite-sized learning and immediate feedback help convert knowledge into automatic, reliable responses. Until training shifts from occasional instruction to continuous engagement, organizations will remain vulnerable to preventable mistakes.

How Technology Can Support Human Psychology with Abnormal

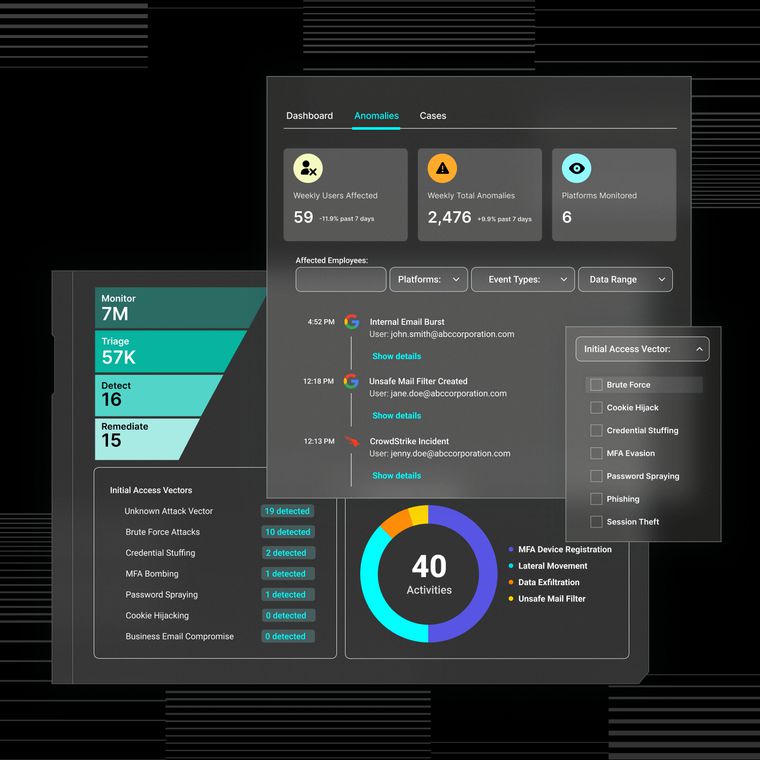

Attackers exploit human instincts faster than most people can react. Abnormal’s Behavioral AI creates a safety net by learning each user’s unique communication patterns and detecting subtle anomalies before authority bias, urgency, or familiarity triggers take hold. From spotting a single-digit change in a routing number to flagging tone shifts in an email thread, the platform stops manipulation without disrupting legitimate work.

For instance, the AI Security Mailbox and GenAI Phishing Coach turn every detection into a real-time micro-lesson, reinforcing security habits and building resilience over time. This behavioral approach closes the gap between human fallibility and machine precision, enabling employees to become an active defense layer.

Combining continuous, scenario-based training with behavioral AI ensures organizations are prepared for both technical and psychological threats. See how Abnormal can strengthen your defenses. Book a personalized demo today.
