Applications of Behavioral AI in Threat Detection

Understand how behavioral AI in threat detection enhances visibility into attacker tactics and reduces false positives.

September 2, 2025

Traditional signature-based security tools cannot keep pace with modern threats. Zero-day exploits, polymorphic malware, and AI-driven social engineering easily bypass static defenses, while analysts lose valuable time chasing false positives. Behavioral AI closes these gaps by learning how users, devices, and vendors normally operate, then flagging deviations that indicate malicious activity.

This article explores five high-impact applications of behavioral AI in threat detection, including preventing business email compromise, detecting insider threats, uncovering lateral movement, safeguarding vendor relationships, and exposing coordinated attack campaigns.

Why Traditional Threat Detection Methods Miss Advanced Attacks

Traditional signature-based detection fails against modern threats because it only recognizes known attack patterns, leaving organizations exposed to new and evolving tactics. These systems match network traffic and files against databases of previously identified malware signatures, effectively catching yesterday's threats while missing today's attacks.

Zero-day exploits, polymorphic malware variants, and living-off-the-land techniques bypass signature defenses entirely. Attackers modify existing malware just enough to evade detection, while advanced persistent threat groups develop entirely new attack vectors that have no corresponding signatures. The lag between a threat's discovery and the distribution of a matching signature leaves recurring detection gaps that sophisticated adversaries exploit.

As signature databases expand, static detection engines produce both excessive false positives and missed detections. Security teams waste resources investigating benign activities while genuine attacks slip through undetected. These systems cannot analyze encrypted traffic, assess behavioral context, or recognize the subtle indicators that distinguish legitimate activity from malicious behavior.

Behavioral AI addresses these weaknesses by learning normal patterns of user, device, and application behavior. Instead of relying on known signatures, it identifies statistically significant deviations from established baselines in real time. This approach detects unknown threats while dramatically reducing false positives, providing security teams with actionable intelligence rather than alert noise.
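To make the idea of a baseline concrete, here is a minimal Python sketch that scores a single observation against a user's history using a z-score. It is an illustrative assumption, not how any particular product models behavior. Production systems combine many features and use more robust statistics. The function name `is_anomalous` and the 3-standard-deviation threshold are hypothetical choices.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observation: float, threshold: float = 3.0) -> bool:
    """Return True when observation lies more than `threshold` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # too little history to form a baseline yet
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of the learned baseline
    if sigma == 0:
        return observation != mu  # any change from a perfectly constant baseline is a deviation
    z_score = abs(observation - mu) / sigma
    return z_score > threshold


# Hypothetical history: megabytes a user downloaded per day over the past week.
daily_mb = [120, 95, 130, 110, 105, 125, 100]
print(is_anomalous(daily_mb, 2400))  # True: far outside the learned range
print(is_anomalous(daily_mb, 115))   # False: an ordinary day
```

A single-feature z-score is easy to explain but brittle when data is skewed. Median-based statistics are a common, sturdier alternative.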

With that context, let's look at the applications of behavioral AI in threat detection.

Application 1: Anomalous Email Behavior Detection

Behavioral AI transforms email security by establishing communication baselines for an organization and detecting the deviations that signal advanced threats. During a brief learning period, the system learns normal sender-recipient relationships, communication tone, and approval workflows. When messages arrive, it compares them against these live baselines to identify business email compromise, invoice fraud, and credential phishing that bypass traditional secure email gateways.

Traditional gateways rely on static rules and sender authentication, but once attackers control legitimate accounts, these checks pass without issue. This limitation explains why security leaders replace secure email gateway solutions that fail against executive impersonation and subdomain spoofing.

Behavioral AI closes this gap through context-rich analysis. Here are the key behavioral indicators to monitor:

Unfamiliar Sender-Recipient Pairings: When emails pass between people who do not normally communicate, or unusual recipients appear on sensitive threads, the AI recognizes a relationship anomaly. For example, a finance team member receiving a wire-transfer email from an engineering contractor triggers immediate analysis.

Language Shifts and Tone Changes: The AI detects dramatic departures from a sender's historical writing style. Natural language processing analyzes sentiment shifts, unusual urgency, and signature variations that indicate account compromise or impersonation.

Workflow and Timing Violations: Organizations follow predictable approval chains and business rhythms. Payment requests that skip approvers or arrive at unusual hours, such as on a weekend, are flagged as potential fraud attempts requiring investigation.

Anomalous Attachments and Links: Sophisticated attackers hide malicious payloads within legitimate conversation threads. The AI examines entire chains for consistency, catching subtle domain changes or unexpected file types.
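The first indicator, unfamiliar sender-recipient pairings, can be sketched as a simple relationship baseline. This is a hypothetical illustration, not a vendor's actual logic. The `RelationshipBaseline` class and the `PAYMENT_TERMS` keyword list are assumptions. So is the rule of escalating only when a novel pairing coincides with payment language, which was chosen to mirror the wire-transfer example above.

```python
from collections import Counter

# Hypothetical payment-related terms; a real system would learn topics, not use a fixed list.
PAYMENT_TERMS = ("wire transfer", "bank details", "invoice", "payment")


class RelationshipBaseline:
    """Tracks how often each (sender, recipient) pair has exchanged email."""

    def __init__(self, min_history: int = 1) -> None:
        self.pair_counts = Counter()      # (sender, recipient) -> message count
        self.min_history = min_history    # messages needed before a pair counts as familiar

    def learn(self, sender: str, recipients: list[str]) -> None:
        """Update the baseline with one observed historical message."""
        for recipient in recipients:
            self.pair_counts[(sender.lower(), recipient.lower())] += 1

    def unfamiliar_recipients(self, sender: str, recipients: list[str]) -> list[str]:
        """Return recipients this sender has rarely or never emailed before."""
        return [
            r for r in recipients
            if self.pair_counts[(sender.lower(), r.lower())] < self.min_history
        ]

    def needs_review(self, sender: str, recipients: list[str], subject: str) -> bool:
        """Escalate when an unfamiliar pairing coincides with a payment-related subject."""
        mentions_payment = any(term in subject.lower() for term in PAYMENT_TERMS)
        return mentions_payment and bool(self.unfamiliar_recipients(sender, recipients))


baseline = RelationshipBaseline()
baseline.learn("alice@corp.example", ["bob@corp.example"])
print(baseline.needs_review("contractor@eng.example", ["bob@corp.example"], "Urgent wire transfer"))  # True
```

Counting pairs is the simplest possible relationship graph. Weighting pairs by recency and frequency would be a natural next step.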

Organizations have prevented major vendor invoice scams when behavioral AI flagged last-minute bank account changes that deviated from historical payment behavior. These incidents surface as single, high-confidence alerts rather than floods of false positives, enabling remediation in minutes. This precision turns email from an attack vector into an intelligent defense layer that adapts to actual communication patterns.

Application 2: Insider Threat and Account Compromise Detection

Behavioral analysis extends beyond email to expose insider threats and account takeovers by learning each employee's normal digital fingerprint and surfacing subtle deviations in real time.

Distinguishing Threat Types Through Behavioral Patterns

Malicious insiders act with intent, negligent insiders make mistakes, and external actors hijack legitimate accounts, but all leave distinct behavioral traces. After an initial learning period, the AI tracks dozens of risk signals across user activity patterns.

Anomalous login activity like unfamiliar devices, late-night access, or impossible travel between geographies provides immediate red flags. The system detects sudden spikes in file downloads or transfers to unsanctioned cloud storage, often indicating exfiltration attempts.
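Impossible travel is one of the easier signals to express precisely. Compute the great-circle distance between two consecutive logins, then ask whether anyone could have covered it in the elapsed time. The sketch below assumes logins have already been geolocated from their source IPs. The `Login` type, the 900 km/h ceiling (roughly airliner cruising speed), and the 100 km noise tolerance are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


@dataclass
class Login:
    user: str
    time: datetime
    lat: float  # latitude geolocated from the source IP (assumed available)
    lon: float  # longitude geolocated from the source IP


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev: Login, curr: Login,
                         max_speed_kmh: float = 900.0,
                         min_distance_km: float = 100.0) -> bool:
    """Flag consecutive logins whose implied travel speed exceeds max_speed_kmh."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if distance < min_distance_km:
        return False  # ignore small jumps caused by IP geolocation noise
    hours = (curr.time - prev.time).total_seconds() / 3600
    if hours <= 0:
        return True  # simultaneous (or out-of-order) logins from distant locations
    return distance / hours > max_speed_kmh


ny = Login("jdoe", datetime(2025, 9, 2, 9, 0), 40.7128, -74.0060)
london = Login("jdoe", datetime(2025, 9, 2, 10, 0), 51.5074, -0.1278)
print(is_impossible_travel(ny, london))  # True: roughly 5,570 km in one hour
```

The same New York and London pair, eleven hours apart, would not be flagged. VPN egress points and inaccurate geolocation are common sources of false positives for this check. That is one reason it works better as one signal among several.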

Language analysis uses natural language processing to reveal tone shifts or unusual collusion patterns. Role deviations are critical signals: when engineers probe finance systems or contractors access repositories they have never touched, behavioral scoring raises an alert. Correlating these signals produces high-confidence alerts instead of flooding teams with noise.
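Correlating weak signals into one high-confidence alert can be illustrated with a noisy-OR combination, which treats each signal score as an independent probability of compromise. This is a swapped-in textbook technique, not a description of any specific product's scoring. The signal names, scores, and 0.8 alert threshold are hypothetical.

```python
from math import prod


def combined_risk(signal_scores: dict[str, float]) -> float:
    """Noisy-OR fusion: treat each score in [0, 1] as an independent chance of compromise."""
    for name, score in signal_scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} must be in [0, 1], got {score}")
    # Probability that at least one signal reflects real compromise.
    return 1.0 - prod(1.0 - s for s in signal_scores.values())


def should_alert(signal_scores: dict[str, float], threshold: float = 0.8) -> bool:
    """Raise a single alert only when the fused evidence crosses the threshold."""
    return combined_risk(signal_scores) >= threshold


print(should_alert({"new_device": 0.3}))  # False
print(should_alert({"new_device": 0.3, "impossible_travel": 0.6, "bulk_download": 0.5}))  # True
```

With the sample numbers, a new device alone (0.3) stays below the threshold. A new device plus impossible travel (0.6) plus a bulk download (0.5), however, fuses to 1 − 0.7 × 0.4 × 0.5 = 0.86 and produces a single alert. The independence assumption rarely holds exactly, so real systems typically learn how signals interact rather than multiplying them naively.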

Application 3: Network Lateral Movement Detection

Behavioral AI proves equally powerful at identifying network-based attacks, detecting lateral movement the moment attackers deviate from normal patterns. After an initial breach, attackers hop from system to system for privilege escalation, reconnaissance, and data staging. The AI continuously learns how users, services, and devices communicate, building living maps of sanctioned east-west traffic.

Once baselines are established, models correlate multiple weak signals that indicate danger:

East-West Traffic Spikes: Normal operations create predictable segment communication patterns. Unusual cross-segment traffic like HR systems accessing engineering databases signals potential lateral movement that traditional firewalls allow.

Abnormal Authentication Patterns: Kerberos authentication tickets follow predictable lifecycles in healthy environments. The AI detects forged golden tickets, or legitimate tickets harvested and replayed in pass-the-ticket attacks, by identifying timing and frequency anomalies that indicate credential abuse.

First-Time Sensitive Access: Employees typically access the same shares and databases repeatedly based on roles. Accounts suddenly connecting to sensitive shares they've never touched, especially after hours, flag potential reconnaissance or data staging for exfiltration.

Unusual Device Communication: Workstations rarely communicate directly, typically routing through servers. The AI recognizes compromised machines establishing peer-to-peer connections or legitimate tools used in unusual ways.
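The first-time sensitive access pattern described above lends itself to a short sketch. For each account, keep the set of shares it has touched, then grade any new access by how sensitive the share is and whether it happens outside business hours. The `AccessBaseline` class, the `SENSITIVE_SHARES` set and its share paths, and the 08:00 to 18:59 business-hours window are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical shares classified as sensitive (stored lower-case).
SENSITIVE_SHARES = {r"\\fs01\finance", r"\\fs01\hr"}
# Assumed business hours: 08:00 through 18:59 local time.
BUSINESS_HOURS = range(8, 19)


class AccessBaseline:
    """Remembers which file shares each account has accessed historically."""

    def __init__(self) -> None:
        self.seen = defaultdict(set)  # account -> set of lower-cased share paths

    def learn(self, account: str, share: str) -> None:
        """Record a historical, presumed-benign access."""
        self.seen[account].add(share.lower())

    def assess(self, account: str, share: str, when: datetime) -> str:
        """Grade an access as normal, low, medium, or high risk."""
        key = share.lower()
        if key in self.seen[account]:
            return "normal"  # the account has used this share before
        if key in SENSITIVE_SHARES and when.hour not in BUSINESS_HOURS:
            return "high"    # first-time sensitive access after hours
        if key in SENSITIVE_SHARES:
            return "medium"  # first-time sensitive access during the day
        return "low"         # new but non-sensitive share


baseline = AccessBaseline()
baseline.learn("svc-build", r"\\fs01\builds")
print(baseline.assess("svc-build", r"\\fs01\finance", datetime(2025, 9, 2, 2, 30)))  # high
```

Returning a graded level rather than a yes/no verdict allows this check to feed a correlation step instead of raising an alert on its own.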

Traditional IDS/IPS tools miss these clues because signatures cannot enumerate every possible form of credential misuse. In enterprise deployments, self-learning AI has dramatically reduced investigation time by delivering a single narrative alert instead of dozens of isolated events. This precision lets analysts focus on meaningful alerts, shrinking dwell time while preventing alert fatigue.

Application 4: Vendor and Supply Chain Attack Detection

Behavioral AI identifies vendor compromises and supply chain infiltrations by detecting minute deviations in trusted partner communications and software delivery patterns. Vendor email compromise hijacks partner accounts to send fraudulent invoices, while supply chain attacks plant malicious code in legitimate updates.

The system continuously models vendor communication and resource access, building dynamic relationship graphs that surface anomalies in real time. When an email from a long-standing supplier arrives from an atypical domain or requests a bank account change, the AI compares the action against that vendor's baseline and assigns a risk score. The same engine monitors API calls and build pipelines, flagging unusual file hashes or traffic patterns.

Key anomalies detected include sudden bank account changes deviating from years of consistent details, atypical domain names mimicking partners through character substitution, unusual vendor access patterns like after-hours data pulls, and abnormal file types from predictable sources suddenly containing unexpected executables.
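Lookalike domains built through character substitution can be caught by normalizing common substitutions and then measuring edit distance to known vendor domains. The sketch below is a simplified assumption. `SUBSTITUTIONS` covers only a handful of ASCII tricks, whereas real homoglyph attacks also use Unicode characters. The vendor domain `acmesupply.example` and the distance cutoff of 1 are hypothetical.

```python
from typing import Optional

# Small, illustrative set of ASCII substitutions; Unicode homoglyphs are ignored here.
SUBSTITUTIONS = {"0": "o", "1": "l", "rn": "m", "vv": "w"}


def normalize(domain: str) -> str:
    """Lower-case a domain and undo the substitutions listed above."""
    d = domain.lower()
    for fake, real in SUBSTITUTIONS.items():
        d = d.replace(fake, real)
    return d


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def lookalike_of(domain: str, known_vendors: set[str], max_distance: int = 1) -> Optional[str]:
    """Return the vendor domain this one imitates, or None if it is exact or unrelated."""
    d = domain.lower()
    if d in {v.lower() for v in known_vendors}:
        return None  # exact match: the genuine vendor domain
    normalized = normalize(d)
    for vendor in known_vendors:
        if levenshtein(normalized, normalize(vendor)) <= max_distance:
            return vendor
    return None


vendors = {"acmesupply.example"}
print(lookalike_of("acmesupp1y.example", vendors))  # acmesupply.example
```

Edit distance alone over-flags short domains. A fuller check would also weigh domain age, registration data, and whether the vendor ever used the new domain before.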

Major manufacturers have avoided significant losses when behavioral AI blocked payment redirect requests from fake domains bypassing perimeter defenses. Contextual, relationship-aware alerts enable analysts to investigate genuine threats rather than chase false positives, closing vendor detection gaps across entire supply chains.

Application 5: Real-Time Attack Campaign Recognition

The most sophisticated behavioral AI application connects the dots across email, network, and SaaS telemetry to surface coordinated attack campaigns in real time, providing the context needed to shut them down before they spread.

Instead of treating alerts in isolation, models continuously learn normal patterns for users, devices, and applications, then link deviations into single storylines. Unusual sender relationships correlate with rogue service calls and unexpected traffic patterns.

The AI also draws on insider behavior profiles to link related events. For instance, odd after-hours data pulls flagged by malicious insider detection are immediately correlated with the same user's privilege escalation and file uploads, rather than generating separate tickets. Platforms that visualize these chains cut investigation time by surfacing only high-confidence, campaign-level alerts.
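Linking alerts into a campaign storyline can be approximated by using a union-find structure to group alerts that share an entity, such as a user, host, or domain. This is an illustrative sketch under stated assumptions. The `Alert` type, the entity string format, and the rule that any shared entity links two alerts are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    source: str         # e.g. "email", "network", "saas"
    entities: set[str]  # users, hosts, domains involved (hypothetical format)


def group_into_campaigns(alerts: list[Alert]) -> list[list[str]]:
    """Union-find over alerts: alerts sharing any entity end up in the same group."""
    parent = {a.alert_id: a.alert_id for a in alerts}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(x: str, y: str) -> None:
        rx, ry = find(x), find(y)
        if rx != ry:
            parent[ry] = rx

    first_alert_for_entity: dict[str, str] = {}
    for alert in alerts:
        for entity in alert.entities:
            if entity in first_alert_for_entity:
                union(first_alert_for_entity[entity], alert.alert_id)
            else:
                first_alert_for_entity[entity] = alert.alert_id

    groups: dict[str, list[str]] = {}
    for alert in alerts:
        groups.setdefault(find(alert.alert_id), []).append(alert.alert_id)
    return list(groups.values())


alerts = [
    Alert("A1", "email", {"user:jdoe", "domain:evil.example"}),
    Alert("A2", "saas", {"user:jdoe"}),
    Alert("A3", "network", {"host:ws-42", "user:jdoe"}),
    Alert("A4", "network", {"host:srv-9"}),
]
print(group_into_campaigns(alerts))  # [['A1', 'A2', 'A3'], ['A4']]
```

Here, email, SaaS, and network alerts that all involve the same user collapse into one group, while an unrelated server alert stays separate. Linking on shared entities alone over-connects busy accounts and shared infrastructure. Correlation would therefore normally also consider time windows and how unusual each shared entity is.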

Analysts spend minutes, not hours, piecing evidence together, slashing mean time to respond and limiting attacker dwell time. In this collaborative model, human analysts validate AI findings while routine containment can be automated. The approach shifts security operations from reactive alert chasing to proactive threat hunting, powered by a complete, real-time view of the adversary's playbook.

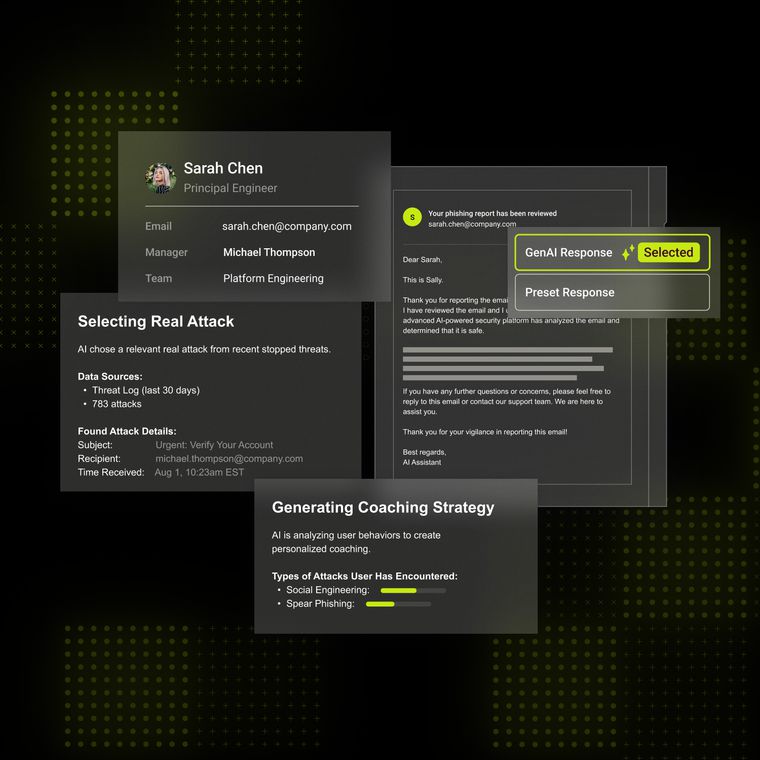

How Abnormal's Behavioral AI Enhances Threat Detection

Abnormal's behavioral AI learns complete environments, then surfaces only threats that matter. The platform continuously models every user, device, application, and vendor relationship to stop sophisticated attacks while eliminating noise.

Behavioral Graph Intelligence builds dynamic relationship graphs that map employee, vendor, and application interactions, making deviations like sudden bank account changes immediately visible. Language-Aware Threat Detection uses advanced natural language processing to analyze tone, sentiment, and intent across emails and collaboration tools, catching business email compromise that secure email gateways miss.

Cross-Channel Coverage extends the same AI models across email, Slack, Zoom, Teams, and Google Workspace, providing unified visibility into multi-vector campaigns without separate point solutions. Zero Infrastructure Overhead means the platform connects through cloud APIs, enabling rapid installation that preserves existing MX records and eliminates inline appliance maintenance.

Customers layering Abnormal's behavioral AI on existing controls report dramatically fewer phishing incidents and significantly faster SOC analysis. Alerts arrive enriched with actor context, intent analysis, and risk scores, enabling immediate action instead of log correlation.

There's a reason organizations are moving beyond static signatures to address threat detection challenges. Ready to transform your threat detection with behavioral AI? Get a demo to see how Abnormal identifies and stops sophisticated attacks that bypass traditional security tools.
