Why AI Social Engineering Attacks Are the Newest Threat to Email Security

Understand AI-enhanced social engineering, the key attack types, and actionable steps to protect your organization.

July 3, 2025

AI-powered social engineering represents the most significant evolution in cyberthreats since the advent of email. Unlike traditional phishing campaigns with obvious red flags, artificial intelligence enables attackers to create sophisticated, personalized attacks at unprecedented scale and with unprecedented precision.

This guide provides CIOs with everything needed to understand the threat landscape, implement robust defenses, and build organizational resilience against AI-enhanced social engineering attacks. The strategies outlined here have been proven effective across enterprise environments and represent current best practices for defending against this evolving threat.

What Makes AI-Powered Social Engineering Different

AI-powered social engineering fundamentally transforms cyberattacks from obvious, template-based campaigns into sophisticated, personalized operations that exploit human psychology with machine precision. Traditional phishing relied on volume and was often betrayed by obvious mistakes; AI attacks rely instead on quality and subtle manipulation.

These attacks operate across multiple communication channels simultaneously, including email, SMS, social media, collaboration tools, video platforms, and voice calls. What makes them particularly dangerous is their ability to adapt in real-time, learn from failed attempts, and create thousands of customized attack vectors within minutes.

Consider this scenario: An employee receives an email from their "CEO" about an urgent acquisition, followed immediately by a text message with additional "confidential" details, and then a phone call that uses AI voice cloning to create pressure for immediate wire transfer authorization. All of this happens before traditional verification processes can be completed.

The sophistication extends beyond communication. AI systems now scrape LinkedIn profiles, analyze corporate announcements, monitor social media activity, and even parse publicly available meeting notes to craft messages that reference specific projects, deadlines, and internal terminology with uncanny accuracy.

The Four Pillars of AI-Enhanced Social Engineering

Understanding how attackers leverage AI across different attack vectors is crucial for building effective defenses. Modern threat actors have augmented four traditional social engineering techniques with artificial intelligence.

These include:

1. Phishing at Unprecedented Scale

Large language models have transformed generic phishing campaigns into mass customization engines. AI automatically collects and analyzes public data to create perfect emails, texts, and social posts that mention specific job responsibilities, current projects, local events, or recent business activities.

Each message features flawless grammar, unique wording, and personalized context that bypasses rule-based security systems designed to catch obvious mistakes. The volume is staggering: a single AI system can generate thousands of unique, personalized phishing messages per hour while maintaining a quality that human operators could never achieve at scale.

Modern AI phishing campaigns coordinate across multiple channels simultaneously. An attack might begin with a subtle email mentioning a legitimate business contact, followed by SMS messages with "additional context," then escalate to fake LinkedIn messages or collaboration tool notifications, all referencing the same fabricated scenario with perfect consistency.

2. Business Email Compromise (BEC)

AI models analyze months of executive communication patterns, learning writing styles, favorite phrases, signature formats, and decision-making language so convincingly that finance teams struggle to distinguish authentic from synthetic communications. When targets question suspicious invoices or transfer requests, attackers escalate by using deepfake voice calls or video clips that show the "CFO" personally approving transactions.

Security experts report exponential growth in incidents where synthetic audio convinces finance staff to approve six-figure payments. Traditional secure email gateways often miss these attacks because they contain no malware and exhibit only sophisticated social engineering cues that appear completely normal within existing email flow patterns.

3. Spear Phishing

AI examines LinkedIn updates, GitHub activity, corporate announcements, and even leaked internal communications to craft targeted attacks that reference yesterday's team meetings, upcoming contract renewals, or specific project challenges. A single compromised partner account can launch dozens of ultra-personalized attacks within an organization, each tailored to individual targets based on their role, recent activities, and communication patterns.

Since these emails originate from trusted addresses and use internal terminology, static reputation scoring and traditional content analysis provide minimal protection. The spear phishing attacks often reference legitimate business processes, making them nearly impossible to distinguish from authentic internal communications without advanced behavioral analysis.

4. Deepfakes and Real-Time Voice Synthesis

Synthetic media tools now create real-time video calls where fake executives appear to give urgent instructions, or produce voicemail deepfakes that bypass traditional phone verification procedures. Such AI-generated media can be extremely difficult to distinguish from genuine interactions using standard detection methods.

The technology has reached a point where attackers can impersonate anyone whose voice appears in publicly available recordings, such as earnings calls, conference presentations, or social media videos. Standard verification scripts fail because the impersonation occurs on the very communication channel organizations typically rely on for authentication.

Real-time voice synthesis now enables live phone conversations where attackers respond naturally to questions while maintaining perfect vocal impersonation. This capability renders traditional "callback verification" ineffective when the callback number has been compromised or spoofed.

How AI Transforms Attack Economics and Scale

Artificial intelligence has fundamentally altered the economics of social engineering by eliminating technical barriers, reducing operational costs, and enabling unprecedented attack scales that render traditional defensive approaches obsolete.

Democratization of Advanced Attack Capabilities

The most significant change is accessibility. Criminal marketplaces now offer user-friendly platforms that package sophisticated attack tools into simple dashboards requiring no technical expertise. Attackers can purchase "Social Engineering as a Service" packages that include AI-generated content, voice synthesis capabilities, deepfake video generation, and coordinated multi-channel delivery—all for less than $100 per month.

Specialized tools designed to remove ethical constraints from language models optimize phishing content generation, enabling attackers to bypass safety measures built into commercial AI systems. This democratization has contributed to rapid growth in social engineering incidents across all organizational sectors.

Scale and Speed Advantages

AI attacks leverage four critical capabilities that dramatically increase success rates compared to traditional human-operated campaigns:

Enhanced Data Mining: Algorithms analyze social media profiles, data breach information, corporate announcements, and publicly available documents to craft messages referencing specific business trips, pending invoices, project deadlines, or internal terminology.

Cross-Platform Coordination: Attackers coordinate sophisticated campaigns across email, SMS, voice, video, and social media platforms simultaneously, creating consistent narratives that build credibility through multiple touchpoints.

Real-Time Adaptation: AI systems monitor target interactions and dynamically adjust messaging strategies, timing patterns, and communication approaches based on victim behavior, achieving conversion rates that exceed traditional human-operated schemes.

Continuous Learning: Machine learning algorithms analyze successful attacks to refine tactics, improve personalization, and identify new vulnerability patterns across target organizations.

Overall, AI has redefined the economics and scale of social engineering, making advanced attacks cheaper, faster, and more accessible than ever. Organizations must adopt AI-powered defenses to counter these evolving threats effectively.

Actionable Defense Strategies Against AI-Enhanced Social Engineering

Defending against AI-enhanced social engineering requires a fundamentally different approach than traditional cybersecurity measures. Organizations must implement comprehensive controls that address both technological vulnerabilities and human psychology while maintaining operational efficiency.

Here are six actionable defense techniques to implement across organizations as effective measures against AI-enabled social engineering:

Deploy Phishing-Resistant Multi-Factor Authentication

Hardware security keys and passkeys are among the most effective defenses against credential theft, which is often the goal of AI-driven phishing attacks. Organizations should enforce phishing-resistant authentication methods, such as FIDO2-based hardware keys, for all users accessing sensitive systems, and eliminate SMS-based authentication because it is susceptible to MFA bypass techniques.

To strengthen access security further, organizations should configure conditional access policies that mandate phishing-resistant MFA for sensitive actions and enforce device trust policies that validate the presence and integrity of hardware keys. Because these credentials are cryptographically bound to the legitimate site, even a highly convincing AI-generated lure cannot trick a user into handing them to a fake login page, which makes this a highly reliable safeguard against credential-based social engineering.
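As a rough illustration of the conditional access logic described above, the following sketch evaluates a single access request. The action names, method names, and decision strings are hypothetical placeholders, not the vocabulary of any particular identity provider.

```python
# Hypothetical labels; a real identity provider defines its own vocabulary.
PHISHING_RESISTANT_METHODS = {"fido2_security_key", "passkey"}
SENSITIVE_ACTIONS = {"wire_approval", "payroll_change", "admin_console"}


def access_decision(action: str, auth_method: str, device_trusted: bool) -> str:
    """Return a policy decision for one access request."""
    if action not in SENSITIVE_ACTIONS:
        return "allow"
    # SMS codes, push prompts, and passwords alone never satisfy this branch.
    if auth_method not in PHISHING_RESISTANT_METHODS:
        return "require_phishing_resistant_mfa"
    if not device_trusted:
        return "deny_untrusted_device"
    return "allow"
```

In practice this logic lives in the identity provider's policy engine rather than in application code; the sketch only shows the order of the checks.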

Strengthen Email Authentication Infrastructure

Email security infrastructure forms the foundation of anti-impersonation defenses by blocking the fake domains that generative AI uses to scale phishing attacks. Organizations must configure SPF records with "-all" hard fail policies for all domains, implement DKIM signing with minimum 2048-bit keys, and set DMARC policies to "p=reject" for all organizational domains to prevent unauthorized sending.
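A quick way to audit these settings is to check the published TXT records for each domain. The sketch below assumes the record strings have already been retrieved (no DNS lookup is performed), and the function names are illustrative rather than part of any library.

```python
def parse_tags(record: str) -> dict:
    """Split a 'k=v; k=v' style record (as used by DMARC) into a dict."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, _, value = part.partition("=")
            tags[key.strip().lower()] = value.strip()
    return tags


def spf_is_hard_fail(record: str) -> bool:
    """True if the record is SPF version 1 and contains the '-all' mechanism."""
    terms = record.lower().split()
    return len(terms) > 1 and terms[0] == "v=spf1" and "-all" in terms[1:]


def dmarc_is_reject(record: str) -> bool:
    """True if the record is a DMARC1 record with policy p=reject."""
    tags = parse_tags(record)
    return tags.get("v", "").upper() == "DMARC1" and tags.get("p", "").lower() == "reject"
```

For example, `spf_is_hard_fail("v=spf1 include:_spf.example.com ~all")` is false because `~all` is a soft fail, which is the kind of gap a quarterly audit is meant to surface.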

Advanced configuration includes implementing BIMI (Brand Indicators for Message Identification) for visual sender verification, applying DMARC policy to all subdomains (for example, through the "sp" tag), and enabling DMARC failure reports so that impersonation attempts are detected quickly. Organizations must disable legacy SMTP authentication protocols entirely and verify that MX records point exclusively to authorized mail servers.

Operational requirements include conducting quarterly DNS security audits, maintaining comprehensive inventories of all organizational domains and subdomains, and establishing secure procedures for domain transfers and DNS changes. IT staff training on email authentication troubleshooting ensures rapid resolution of legitimate sending issues while maintaining security posture. Weekly monitoring of DMARC reports provides visibility into impersonation attempts and unauthorized sending activities targeting organizational domains.
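The weekly DMARC review mentioned above can be partly automated. The following is a minimal sketch that tallies, per source IP, the messages that failed both DKIM and SPF in a DMARC aggregate report. It assumes the report XML uses the common un-namespaced layout (`record` / `row` / `policy_evaluated`); reports that declare an XML namespace would need adjusted element paths.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def failing_sources(report_xml: str) -> Counter:
    """Count messages per source IP where both DKIM and SPF failed."""
    root = ET.fromstring(report_xml)
    failures = Counter()
    for row in root.iter("row"):
        evaluated = row.find("policy_evaluated")
        if evaluated is None:
            continue
        dkim = evaluated.findtext("dkim", default="")
        spf = evaluated.findtext("spf", default="")
        # DMARC passes if either mechanism passes, so count only double failures.
        if dkim != "pass" and spf != "pass":
            source_ip = row.findtext("source_ip", default="unknown")
            failures[source_ip] += int(row.findtext("count", default="0"))
    return failures
```

Calling `failing_sources(xml_text).most_common(10)` gives a short list of the sources most worth investigating as possible impersonation attempts or misconfigured legitimate senders.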

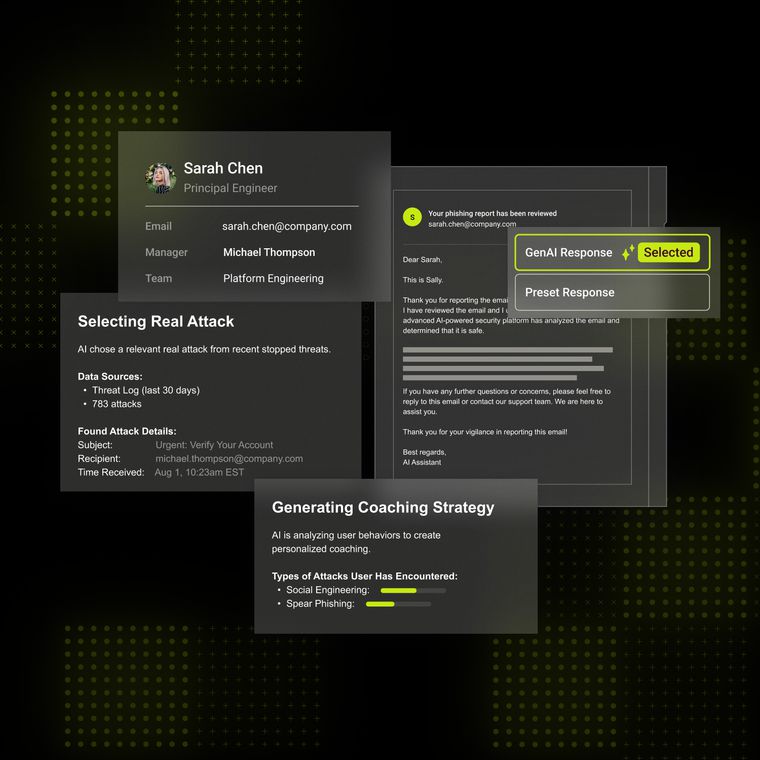

Deploy Behavioral AI-Enhanced Email Security

Traditional email filters cannot detect AI-generated social engineering attacks that contain no malware and use perfect grammar with personalized content. Organizations must layer behavioral AI detection on top of traditional email security systems to establish baseline communication patterns and detect subtle anomalies typical of AI-enhanced attacks.

Modern solutions require API-based integration to protect Microsoft 365, Google Workspace, and collaboration platforms without impacting performance. Cloud-native architectures analyze communication patterns in real-time while incorporating threat intelligence feeds for emerging attack indicators. These systems establish baseline communication patterns for all users, detect anomalies in sender behavior and recipient interactions, and analyze linguistic patterns to identify AI-generated content.
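To make the idea of a communication baseline concrete, here is a deliberately small sketch. It records which domains each display name has historically sent from and raises two simple signals on a new message. Production systems model far more features; the class name, method names, and signal strings here are assumptions made for illustration.

```python
from collections import defaultdict
from typing import List, Optional


class SenderBaseline:
    """Tracks which domains each display name has historically sent from."""

    def __init__(self) -> None:
        self._domains_by_name = defaultdict(set)

    @staticmethod
    def _domain(address: str) -> str:
        return address.rsplit("@", 1)[-1].lower()

    @staticmethod
    def _key(display_name: str) -> str:
        # Case-fold and collapse whitespace so trivial variations match.
        return " ".join(display_name.lower().split())

    def observe(self, display_name: str, address: str) -> None:
        """Record one legitimate message in the baseline."""
        self._domains_by_name[self._key(display_name)].add(self._domain(address))

    def anomalies(self, display_name: str, address: str,
                  reply_to: Optional[str] = None) -> List[str]:
        """Return human-readable signals for a new message."""
        signals = []
        known = self._domains_by_name.get(self._key(display_name))
        if known and self._domain(address) not in known:
            signals.append("known display name from unseen domain")
        if reply_to and self._domain(reply_to) != self._domain(address):
            signals.append("reply-to domain differs from sender domain")
        return signals
```

Neither signal proves an attack on its own; the point is that both are properties of sender behavior rather than of message content, so flawless grammar does not hide them.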

Behavioral analysis capabilities must monitor cross-platform communication for coordinated attack campaigns while implementing machine learning models that adapt to new attack techniques. Organizations require automated response capabilities for confirmed threats, comprehensive incident response workflows for suspicious communications, and detailed dashboards for security team monitoring and investigation.

Implement Executive Protection and Anti-Impersonation Controls

Executive impersonation represents the highest-risk attack vector in AI-enhanced social engineering, requiring specialized protection measures that address display name spoofing, urgency manipulation, and multi-channel verification. Organizations must create specific rules that block messages with fake executive display names, while implementing fuzzy matching algorithms to catch variations and typos that traditional filters miss.
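The fuzzy matching step can be sketched with Python's standard `difflib` module. The executive directory, the addresses, and the 0.85 similarity threshold below are illustrative assumptions; a real deployment would tune the threshold against its own mail flow.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical directory of protected names and their real addresses.
EXECUTIVES = {"Dana Whitfield": "dana.whitfield@example.com"}


def normalize(name: str) -> str:
    """Lowercase and collapse whitespace before comparing names."""
    return " ".join(name.lower().split())


def impersonated_executive(display_name: str, sender_address: str,
                           threshold: float = 0.85) -> Optional[str]:
    """Return the executive whose name is being imitated, or None."""
    candidate = normalize(display_name)
    for exec_name, exec_address in EXECUTIVES.items():
        score = SequenceMatcher(None, candidate, normalize(exec_name)).ratio()
        # A near-identical name from any address other than the real one is suspect.
        if score >= threshold and sender_address.lower() != exec_address:
            return exec_name
    return None
```

With this directory, a message from "Dana Whitfeld" at an unrelated address is matched to Dana Whitfield even though the misspelling would slip past an exact-match rule.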

Urgency and financial request detection systems must flag messages containing urgent financial language combined with external senders, detecting requests for wire transfers, payment changes, or confidential information. Content analysis for emergency language patterns helps identify artificial urgency tactics while workflow requirements ensure all financial communications follow proper authorization procedures.
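A rule of this kind might look like the following sketch, which flags a message only when urgency language, financial language, and an external sender occur together. The keyword lists are short illustrative samples, not a vetted detection vocabulary.

```python
import re

# Illustrative keyword samples only; real rule sets are broader and tuned.
URGENCY = re.compile(
    r"\b(urgent|immediately|asap|right away|before end of day|confidential)\b", re.I
)
FINANCIAL = re.compile(
    r"\b(wire transfer|payment|invoice|bank details|account number|gift cards?)\b", re.I
)


def flag_message(sender_address: str, body: str, internal_domains: set) -> bool:
    """Flag external messages that combine urgency with a financial request."""
    domain = sender_address.rsplit("@", 1)[-1].lower()
    is_external = domain not in internal_domains
    return is_external and bool(URGENCY.search(body)) and bool(FINANCIAL.search(body))
```

Requiring all three conditions keeps the rule from firing on every invoice or every urgent internal note, at the cost of missing attacks sent from a compromised internal account.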

Multi-channel verification requires callback verification for all financial requests exceeding defined thresholds, voice biometric verification for high-value transactions, and secure channels for executive communication verification. Advanced protections include voice authentication systems for critical business communications, video call security protocols using secure meeting platforms, and comprehensive procedures for detecting and responding to deepfake communications that impersonate executive leadership.

Conduct Advanced AI-Generated Phishing Simulations

Traditional template-based phishing simulations cannot prepare organizations for AI-generated attacks. Organizations should deploy simulation platforms that build personalized scenarios from individual user profiles and run multi-channel campaigns across email, SMS, and voice communications.

Modern simulation platforms generate content reflecting current threat intelligence and attack trends, targeting different user groups with role-specific attack scenarios that reference specific job functions and current projects. Monthly simulations with varying sophistication levels measure click rates, credential submission behaviors, and reporting effectiveness while tracking improvement metrics over time.
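The three measurements named above reduce to simple ratios over a campaign's results. This sketch assumes each simulated message yields one record per user; the `SimulationResult` type and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class SimulationResult:
    user: str
    clicked: bool
    submitted_credentials: bool
    reported: bool


def campaign_metrics(results: List[SimulationResult]) -> Dict[str, float]:
    """Compute click, credential submission, and report rates for one campaign."""
    total = len(results)
    if total == 0:
        return {"click_rate": 0.0, "submission_rate": 0.0, "report_rate": 0.0}
    return {
        "click_rate": sum(r.clicked for r in results) / total,
        "submission_rate": sum(r.submitted_credentials for r in results) / total,
        "report_rate": sum(r.reported for r in results) / total,
    }
```

Comparing these figures month over month, rather than reading any single campaign in isolation, is what shows whether training is actually improving behavior.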

Personalization capabilities must create industry-specific scenarios relevant to organizational operations, implement seasonal campaigns reflecting current events and business cycles, and develop executive-targeted simulations for leadership awareness. Integration with training programs provides immediate feedback for simulation failures, delivers just-in-time training based on individual vulnerability patterns, and creates remedial programs for repeated failures. Comprehensive metrics track organizational resilience scores, measure security awareness training effectiveness across user groups, and generate executive dashboards showing organizational risk posture.

Establish Dual-Channel Verification for Critical Operations

Single-channel verification procedures fail against AI-enhanced social engineering attacks that can impersonate trusted sources across multiple communication platforms simultaneously. Organizations must establish dual-channel verification for all critical operations, requiring voice verification for wire transfers exceeding $10,000, secure chat confirmation for payroll and banking changes, and ticketing system requirements for vendor payment modifications.
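The verification rules in this paragraph can be expressed as a small policy function. The sketch below encodes the $10,000 wire threshold and the channel requirements stated above; the request kinds, channel labels, and type names are placeholders chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

WIRE_THRESHOLD_USD = 10_000

# Second channel required for each non-wire request kind (labels are placeholders).
REQUIRED_CHANNEL = {
    "payroll_change": "secure_chat",
    "banking_change": "secure_chat",
    "vendor_payment_change": "ticket",
}


@dataclass(frozen=True)
class Request:
    kind: str
    request_channel: str
    amount_usd: float = 0.0


def required_second_channel(req: Request) -> Optional[str]:
    """Return the channel that must confirm this request, or None if none is needed."""
    if req.kind == "wire_transfer":
        return "voice" if req.amount_usd > WIRE_THRESHOLD_USD else None
    return REQUIRED_CHANNEL.get(req.kind)


def is_verified(req: Request, confirmed_channels: set) -> bool:
    """True only if the required confirmation arrived on a different channel."""
    needed = required_second_channel(req)
    if needed is None:
        return True
    # A request cannot verify itself on the channel it arrived on; escalate instead.
    return needed in confirmed_channels and needed != req.request_channel
```

The final check is the important one: a payroll change requested in chat and "confirmed" in the same chat thread is still single-channel, so it fails verification.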

Financial transaction controls must include manager approval workflows for high-risk operations. Data protection procedures should require dual authorization for access to or export of personal data, verification for cloud storage sharing changes, and approval workflows for system access modifications. Communication channel security requires designated secure platforms for sensitive business discussions, encryption requirements for confidential information sharing, and backup communication channels for emergency scenarios.

Operational implementation involves training finance teams on verification procedures and escalation protocols while creating user-friendly verification tools that maintain business efficiency. Automated verification reminders for high-risk transactions ensure compliance while clear procedures for handling verification failures prevent operational disruption. Technology integration deploys workflow automation tools that enforce verification requirements, integrates procedures with existing business applications, and creates comprehensive audit trails for all verification activities and approvals.

Defend Against Social Engineering Tactics with Abnormal

Abnormal's AI-powered behavioral analysis provides superior defense against sophisticated social engineering attacks that traditional security awareness training cannot address alone. Organizations benefit from reduced false positives, enhanced detection accuracy, and adaptive protection that evolves with threat actors that leverage AI to create increasingly convincing attacks targeting personal data and sensitive information.

Discover how the platform can help you create a defense strategy against social engineering by requesting a demo.
